Hundreds of deployments every day without tears

Challenge

The deployment process of Plaid’s engineering team was cumbersome. Because it was easier not to deploy software than to deploy it, engineers were incentivized to bundle hundreds of pull requests into a single deployment. The result: stale services, slow feature releases, and an error-prone, painful software deployment process.

Solution

Build a system that enables canary deployments and health metrics checks with Argo Rollouts, Linkerd, and Nginx. Developers would get a clean, straightforward experience while gracefully rolling out workloads.

Impact

Plaid releases hundreds of times per day across hundreds of services. Going from merge to production takes less than 30 minutes, a significant improvement. The team can now prevent state drift and catch configuration bugs that were previously detectable only in production.

By the numbers

Under 30 mins

From merging to production

100s of releases per day

Across hundreds of services

Prevented 95

Downtime minutes in one year

Letting hundreds of deployments work every day without tears

Plaid is the engine behind the world’s most successful fintech applications, supporting over 12,000 banks globally. To achieve that, the engineering team releases hundreds of times per day across hundreds of services, with seamless automatic monitoring and rollbacks whenever needed. Cloud native technologies, and Linkerd in particular, helped Plaid achieve that. In this case study, we’ll explore how.

The Plaid engineering team

Plaid provides a computing infrastructure that allows users to access banking systems seamlessly. The Plaid platform eliminates common friction points, such as connecting with thousands of individual banks, each with its own unique API. Instead, Plaid customers connect to the platform, and Plaid establishes that connection, ensuring a secure and reliable data exchange between financial institutions. It provides easy access to thousands of banks in the US, Canada, Europe, and Great Britain. Today, the world’s most successful fintech apps, including Venmo, Acorns, Betterment, Chime, and SoFi, rely on Plaid.

The fintech company has about 400 developers and 15 platform engineers responsible for around 250 services running on a dozen clusters distributed across all regions.

Deployments were slow and painful. Plaid needed a change!

The Plaid engineering team uses a monorepo with several hundred services. While changes that touch dozens of services are easy to write, deploying them everywhere was a huge endeavor. The result: stale services and slow deployments due to a lack of visibility on the developers’ side. That, of course, didn’t incentivize good deployment practices. Instead of lots of small deployments, hundreds of PRs were bundled into a single deployment.

Relying on manual processes that differed for each service, developers would deploy blindly. And because deployments were initiated manually, some software got written but never deployed, while other software got deployed accidentally. In short, deploying software was error-prone and a huge pain for everyone. It became clear the engineering team needed easy, reliable, automated deployments.

Plaid’s path to zero-touch deployments

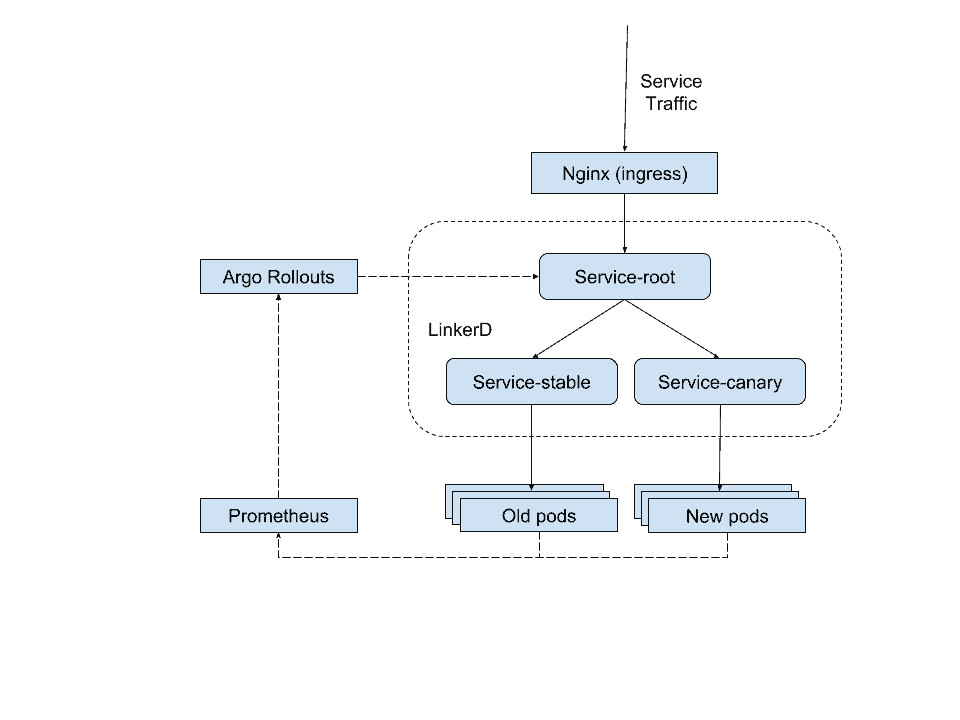

In 2021, Plaid started building a system enabling canary deployments and health metrics checks. It had three main components: Argo Rollouts, Linkerd, and Nginx.

They use Jenkins for their deployment pipeline. For the most part, developers don’t need to interact with the tooling, and they trust it to be able to do smooth deployments anywhere in the organization. They rely on Argo Rollouts’ integration with Linkerd to provide this capability, allowing Linkerd to shift traffic around. This allows the platform team to give developers a clean, straightforward experience while still being able to gracefully roll out workloads where Nginx wouldn’t be able to control them directly.

The architecture diagram shows data traffic as black arrows and control traffic as dashed arrows. Plaid uses the Nginx ingress for traffic coming into the cluster, and it handles TLS termination. At the time, Nginx traffic shifting was bare-bones. It worked, but it had to be configured to shift by pod count rather than by an arbitrary percentage.
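To see why shifting by pod count is coarser than shifting by percentage, consider the arithmetic. The sketch below is illustrative only: it assumes traffic is spread evenly across all pods, and the function names and pod counts are hypothetical rather than taken from Plaid’s setup.

```python
from fractions import Fraction


def canary_share_by_pod_count(canary_pods: int, stable_pods: int) -> Fraction:
    """Traffic share the canary gets when requests are spread evenly over all pods."""
    total = canary_pods + stable_pods
    if total == 0:
        raise ValueError("at least one pod is required")
    return Fraction(canary_pods, total)


def reachable_shares(total_pods: int) -> list[Fraction]:
    """Every canary share reachable at a fixed total pod count."""
    if total_pods <= 0:
        raise ValueError("total_pods must be positive")
    return [Fraction(n, total_pods) for n in range(total_pods + 1)]


if __name__ == "__main__":
    # Hypothetical service with 4 pods: the smallest non-zero canary step is 25%.
    print([f"{float(share):.0%}" for share in reachable_shares(4)])
    # A single canary pod next to 9 stable pods still receives 10% of traffic.
    print(f"{float(canary_share_by_pod_count(1, 9)):.0%}")
```

Under this even-spread assumption, a small service cannot send, say, 1% of traffic to a canary without running roughly a hundred pods. Weight-based shifting in a mesh decouples the traffic share from the number of pods.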

“Our main concern was to achieve high control over how traffic was routed in our Kubernetes cluster. Today, we’ve implemented a zero-touch deployments (ZTD) approach, deploying each change and monitoring for problems that require rollbacks.”

Mark Robinson, Infrastructure Engineer at Plaid

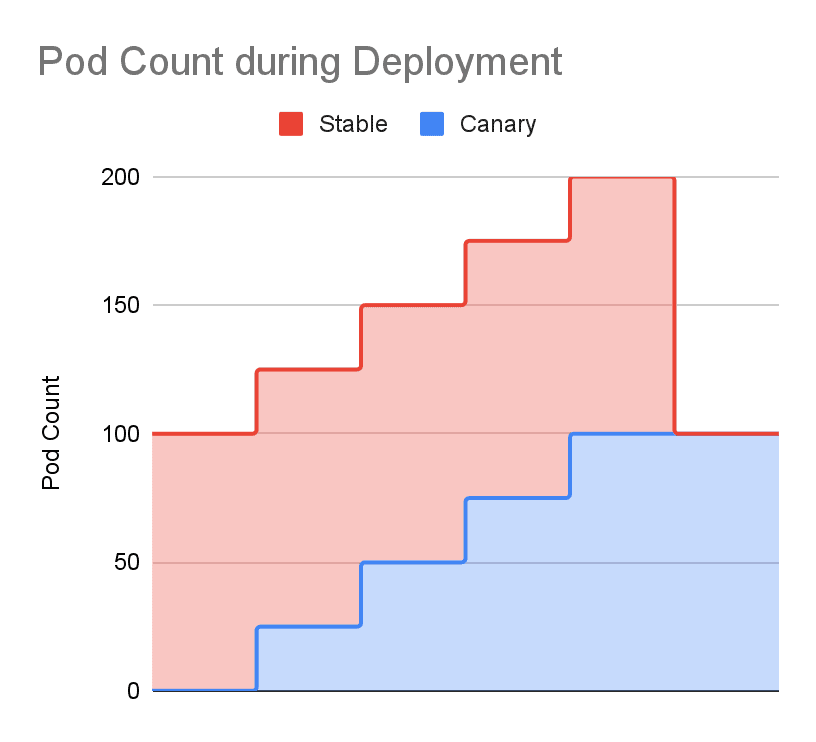

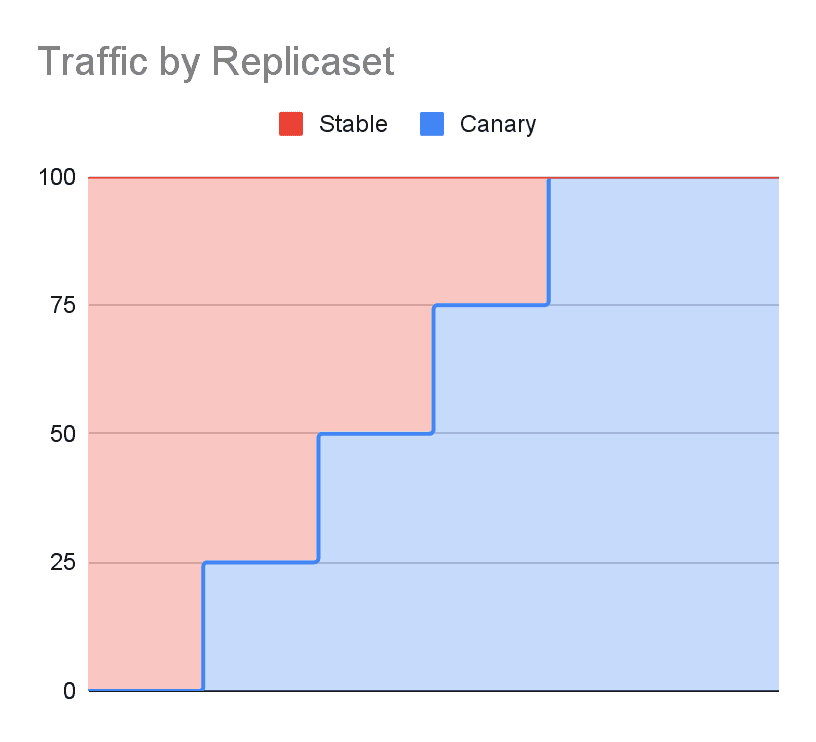

During a canary deployment, Plaid would spin up new pods while keeping the old ones running. This allowed for instant rollbacks. The Linkerd service mesh provided a very fine-grained way to shift traffic to the new pods without terminating the old pods. The two images above show pod count over time and traffic distribution during the deployment.
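The control loop behind such a rollout can be sketched roughly as follows. This is a simplified simulation, not the Argo Rollouts or Linkerd API: the step weights, the error-rate threshold, and the `observe_error_rate` callback are all hypothetical stand-ins for the health metrics checks described earlier.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class CanaryResult:
    promoted: bool
    final_canary_weight: int  # percent of traffic on the new version
    history: List[int] = field(default_factory=list)


def run_canary(
    steps: Sequence[int],
    observe_error_rate: Callable[[int], float],
    max_error_rate: float = 0.01,
) -> CanaryResult:
    """Walk through canary traffic weights; roll back on the first unhealthy step.

    steps: canary weights in percent (hypothetical values, e.g. 5, 25, 50, 100).
    observe_error_rate: returns the canary's error rate at a given weight
        (a stand-in for a real metrics query).
    """
    if not steps:
        raise ValueError("at least one step is required")
    history: List[int] = []
    for weight in steps:
        history.append(weight)
        if observe_error_rate(weight) > max_error_rate:
            # The old pods are still running, so rolling back is only a
            # weight change: send all traffic back to the stable version.
            history.append(0)
            return CanaryResult(promoted=False, final_canary_weight=0, history=history)
    return CanaryResult(promoted=True, final_canary_weight=history[-1], history=history)


if __name__ == "__main__":
    healthy = run_canary((5, 25, 50, 100), lambda weight: 0.001)
    failing = run_canary((5, 25, 50, 100), lambda weight: 0.2 if weight >= 25 else 0.001)
    print(healthy.promoted, healthy.history)
    print(failing.promoted, failing.history)
```

The point of the sketch is the rollback branch: because the stable pods are never terminated mid-rollout, reverting requires no new pods to start, only a change in traffic weights.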

Adopting a service mesh

Whenever Plaid’s engineering team adopts a new technology, they do extensive research. Their service mesh evaluation process was no different. They looked into Linkerd, Istio, and some other Envoy-based meshes. While they were able to get the other service meshes up and running, they were a lot more complex than Linkerd. Ultimately, they didn’t see sufficient benefit in accepting that operational overhead. Linkerd’s reliability and operational simplicity made the difference — it worked from the start.

The team had some simple Go services running in a matter of days. A little more difficult was figuring out what breaks when you turn on the mesh. They had to tweak some gRPC settings in Node, for example. But overall, it took them a few weeks to get everything sorted and to be able to start the main production migration.

Besides getting help routing traffic to specific services, the team also encountered a very responsive and welcoming community. On a few occasions, they needed help, asked questions on the Linkerd Slack channel, and got answers fairly quickly. They submitted a few bug reports and a PR; the maintainers were always responsive.

Reducing friction and developer cycle time

Plaid’s new deployment process minimized friction and decreased cycle time. And that is business-critical for the company. After all, they are a tech company, and when there’s an issue or a feature request, they must move at a tech-industry pace. With hundreds of services and developers, that means supporting a self-service deployment pipeline that’s still safe enough for the financial sector.

“Today, deployments don’t require manual intervention, and we minimized the number of bugs that reach production by automatically rolling bad code back without human intervention,” explained Robinson. “We are all open source fans and use multiple CNCF projects, including Kubernetes, Cilium for our CNI, Helm for some installation management, Prometheus and Thanos for monitoring, and Argo Rollouts for CD.” They also use Nginx as their ingress and Atlantis (with Terraform) for infrastructure configuration.

Hundreds of releases per day across hundreds of services

Today, Plaid releases hundreds of times per day across hundreds of services, with seamless automatic monitoring and rollbacks if needed. For most developers, this means they can simply submit a pull request, and the new version of their service is active and running with no further attention from them. And they can do all that in less than 30 minutes from merging to production — across AZs, with no additional effort.

“We’re confident that what is in git is running in production. Preventing state drift has saved us from many problems. We’ve also caught several configuration bugs that would otherwise only be detectable in production,” stated Robinson.

They achieved that by using Linkerd’s Argo Rollouts integration, allowing for smooth, automated canary releases for every service, every time. Developers interact with Jenkins. It’s rare for them even to need to think about the mesh. The mesh just transparently provides everything Argo needs to do clean canary deployments anywhere in Plaid’s system.

“This setup has been great. And the fact that we can safely deploy code later in the day or on weekends has reduced our team’s stress levels immeasurably.”

Mark Robinson, Infrastructure Engineer at Plaid