How cloud native technology helps Mux simplify online video streaming

Challenge

Mux aims “to make online video streaming easy for everyone,” says Co-founder Adam Brown, and its data and video workflows involve many moving parts. To manage that complexity, the company used Docker containers for its very first deployment in December 2015. Mux initially used the Rancher stack for orchestration, but ran into stability problems around networking and also needed a solution that would support multiple clouds.

Solution

Brown decided to migrate to Kubernetes, with the Mux team running the clusters themselves rather than using a managed service.

Impact

Moving to Kubernetes “increased our productivity in how we manage and version deployments,” says Brown. “We’ve also been able to dynamically scale up and down very quickly to respond to very large live streaming events that have potentially millions of viewers.” The entire development workflow has been simplified; now, a development environment can be spun up in less than 15 minutes versus hours of setup previously, and rolling the entire cluster (with about 90 microservices) across multiple cloud regions takes less than an hour.

By the numbers

Development environment can be spun up in less than 15 minutes

90 microservices

Rolling the entire cluster across multiple cloud regions takes less than an hour

“Mux” is shorthand for multiplexing, or combining multiple signals into one in digital media.

Fittingly, since its founding in 2015, Mux has worked to combine everything needed to “make online video streaming easy for everyone,” says Co-founder Adam Brown. “Our goal is to remove the technical complexity from building a high quality streaming experience. We help automate decisions like codecs, encoding settings, and CDN distribution behind an easy-to-use API.”

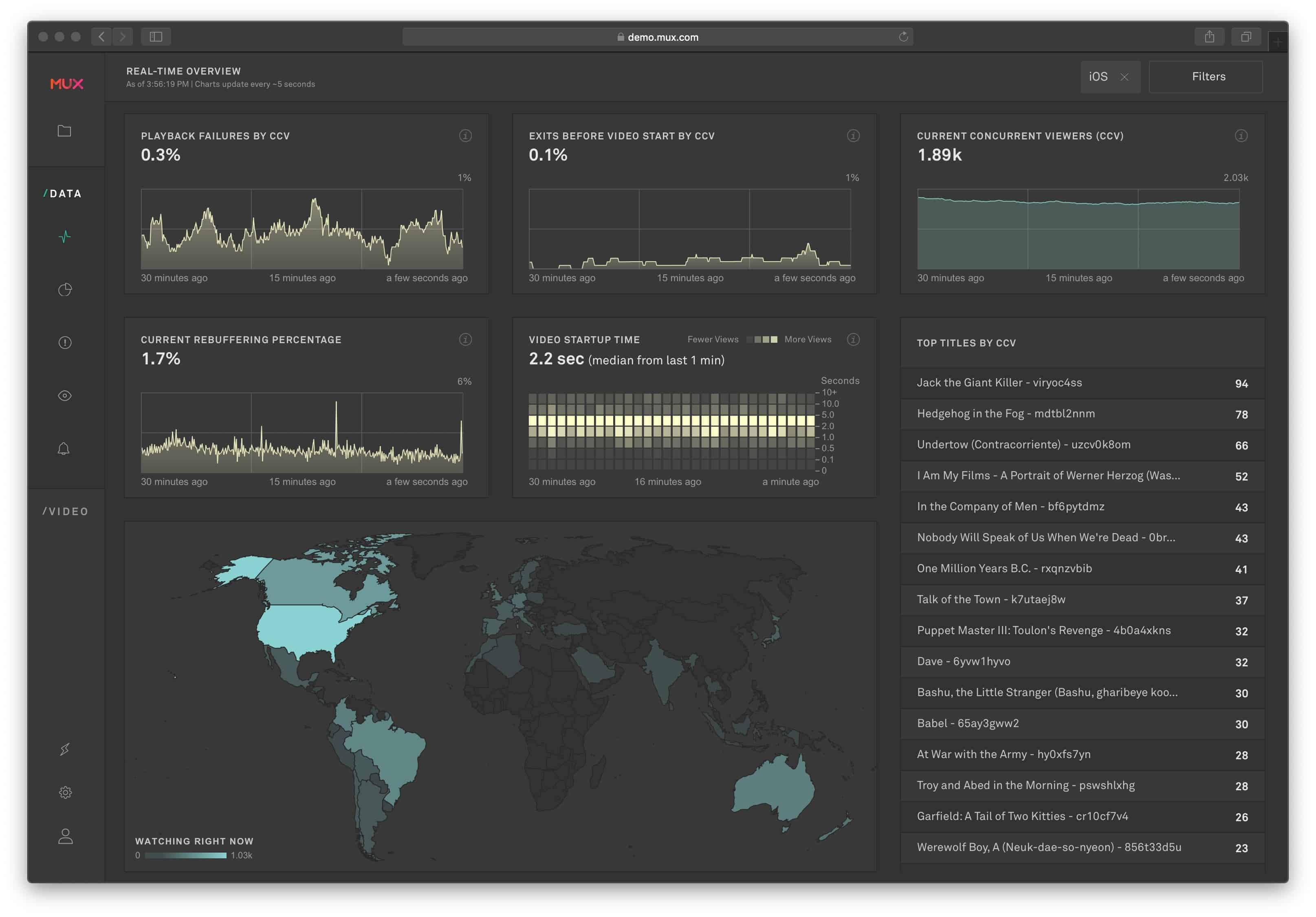

Mux’s two products are a data product that provides users Quality of Experience (QoE) metrics for their videos across video players around the world, and a video product that allows developers to easily build and work with video on demand or live streaming.

With both these workflows, especially the video one, “there’s a lot of moving pieces involved,” says Brown, who is head of technology and architecture at Mux. “We manage lots of very large files from various places around the globe, and we transcode a lot of files. Then there are data pipelines involved for making algorithmic decisions as to what transcoding parameters to use, what bitrates to produce, and what CDNs to distribute through.”

To manage that complexity, the company embraced containerization early on, using Docker containers for its very first deployment in December 2015. Initially, Mux used the Rancher stack to manage the containers. “It seemed like the easiest thing to get started with,” says Brown. “Kubernetes was young at that time, and there was a lot more complexity to get the cluster up and running.”

Over time, the company ran into stability problems around networking, and as the Kubernetes ecosystem matured, Brown revisited the idea of moving everything over to Kubernetes. He considered the various managed Kubernetes offerings hosted by cloud providers, but ultimately decided to have the Mux team run Kubernetes itself.

“We run on multiple clouds, both Google and Amazon,” Brown explains. “One of the things that we were very concerned about early on was having a consistent environment across both our internal development and operations. We wanted the logging to work the same, the metrics to work the same. There were some technical challenges to get it to work the way we wanted to with the managed services due to the access that you had to the host level. So we ended up managing it ourselves across all clouds.”

Because everything (all builds and deployments, and even the CI pipeline) was already done inside containers, the move to Kubernetes was straightforward. Since then, Mux has moved its entire build system to Bazel, “which has dramatically decreased build time and increased maintainability,” says Brown. Additionally, “we have moved to a new Starlark-based DSL for managing YAML generation, and have enhanced our development environment via integration with Tilt, which lets developers get up and running even more quickly and run the dev environment in the cloud or on their local machines with ease.”
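
Mux’s Starlark DSL itself is not shown here, so the following is only a sketch of the general idea: because Starlark is a dialect of Python, a manifest-generating helper can be written as an ordinary function that returns data, and the YAML is produced from that data instead of being hand-edited. The names `deployment` and `render`, and the image names, are hypothetical; the output uses JSON, which is also valid YAML 1.2, wrapped in a Kubernetes `v1` `List`.

```python
import json


# Hypothetical helper in the spirit of a Starlark-based manifest DSL.
# Starlark is a Python dialect, so this would read almost the same in a .star file.
def deployment(name, image, replicas=1, port=None, env=None):
    """Build a minimal Kubernetes apps/v1 Deployment as a plain dict."""
    container = {"name": name, "image": image}
    if port is not None:
        container["ports"] = [{"containerPort": port}]
    if env:
        # Kubernetes expects env values as strings; sort keys for stable output.
        container["env"] = [{"name": k, "value": str(v)} for k, v in sorted(env.items())]
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


def render(manifests):
    """Wrap manifests in a v1 List and serialize; JSON output is also valid YAML."""
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests},
                      indent=2, sort_keys=True)


if __name__ == "__main__":
    # Hypothetical services and image names, for illustration only.
    services = [
        deployment("api", "example.com/api:1.0", replicas=3, port=8080),
        deployment("worker", "example.com/worker:1.0", env={"QUEUE": "jobs"}),
    ]
    print(render(services))
```

The design point is that shared conventions (labels, selectors, port layout) live in one function, so roughly 90 services can be described by short calls rather than by copies of near-identical YAML.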

Moving to Kubernetes had an immediate impact at Mux. “It’s increased our productivity in how we manage and version deployments,” he says. “We’ve also been able to dynamically scale up and down very quickly to respond to very large live streaming events that have potentially millions of viewers.”
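
The source doesn’t say which mechanism Mux uses to scale for large live events. One built-in option in Kubernetes is the Horizontal Pod Autoscaler (HPA), and the sketch below reproduces its documented core rule, assuming a single metric: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured bounds. The real controller adds stabilization windows and scaling policies that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=100, tolerance=0.1):
    """Core HPA rule as documented by Kubernetes:
    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas]. The bounds here are illustrative."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target must be positive")
    ratio = current_metric / target_metric
    # Inside the tolerance band the HPA leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, if a live event pushes 4 pods to twice their target load, the rule asks for 8 pods; when traffic drops to half the target on 10 pods, it scales down to 5.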

The consistency across cloud providers that Kubernetes enables, Brown says, “allowed us to think about every cloud provider as purely compute resources. As far as our developers are concerned, when they’re writing software, even complex system software, they don’t have to think too much about what cloud it’s going to run in. And that has simplified our entire development workflow quite a bit.”

Now, a development environment can be spun up in less than 15 minutes versus hours of setup previously, and rolling the entire cluster (with about 90 microservices) across multiple cloud regions takes less than an hour.

Brown notes that Mux’s cloud native stack has also enabled the company to do some interesting things with its video streaming. “It’s very easy for us to iterate on our internal APIs and maintain compatibility,” he says. “That’s been a huge time savings.”

Besides Kubernetes, Mux has implemented a number of other cloud native technologies, including Prometheus for all of its monitoring, Jaeger tracing with the OpenTracing API, etcd, and gRPC. “We’ve embraced the powerful feature set of gRPC, including bidirectional streams,” Brown says. “We’ve been really leveraging gRPC for almost all internal communication, including transporting video bytes internally.”
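
How Mux structures its gRPC video transport isn’t described, so this is a stdlib-only sketch of the usual pattern behind “transporting video bytes” over streams. Common gRPC implementations reject received messages larger than 4 MB by default, so large payloads are typically split into a stream of smaller messages. In gRPC’s Python API, streaming methods consume and produce iterators of messages, so generators like these would wrap each chunk in a protobuf message (for example, a hypothetical `VideoChunk` with a `bytes` field). The chunk size and the `ack_stream` handler are illustrative assumptions.

```python
from typing import Iterable, Iterator

# Illustrative chunk size, well under the common 4 MB default receive limit.
CHUNK_SIZE = 64 * 1024


def chunk_bytes(data: bytes, chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Split a payload into fixed-size pieces (the last one may be shorter)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for offset in range(0, len(data), chunk_size):
        yield data[offset:offset + chunk_size]


def ack_stream(chunks: Iterable[bytes]) -> Iterator[int]:
    """Stand-in for a bidirectional handler (hypothetical): it replies after
    every incoming chunk with the running byte count, instead of waiting
    for the whole file to arrive."""
    received = 0
    for chunk in chunks:
        received += len(chunk)
        yield received


def reassemble(chunks: Iterable[bytes]) -> bytes:
    """Receiver side: concatenate chunks back into the original payload."""
    return b"".join(chunks)
```

The bidirectional part is what `ack_stream` hints at: each side can send while the other is still sending, so a receiver can start processing or acknowledging video data before the upload finishes.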

The company also uses the StackRox product for container scanning, runtime scanning, and runtime vulnerability detection. “The thing we liked so much about their product is that it was the easiest to drop in,” says Brown. “The other really nice thing is that it quickly gives you insights for triage, so you know which security problems to look at first. If it finds vulnerabilities, it adds in other Kubernetes information, like whether the workload is running in testing or production, or whether it’s exposed to the Internet. It’s good at ranking the overall severity and pointing you at something actionable quickly.”

Given the services that Mux provides to its customers, this cloud native stack has real business impact. “We have latency requirements, and one metric that we track internally is time to first byte—how quickly we can get video streaming out,” he says. “There’s a lot of complexity that goes into serving a single request, and I don’t think we would have been able to do it as quickly or effectively without gRPC and Kubernetes.”
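
The source doesn’t describe how Mux measures time to first byte, so this is a minimal sketch under one assumption: TTFB is the interval between starting to read a response stream and receiving its first non-empty chunk. In production, a value like this would typically be recorded in a Prometheus histogram. The sketch sticks to the standard library and takes the clock as a parameter so it can be tested deterministically.

```python
import time
from typing import Callable, Iterable, Optional, Tuple


def time_to_first_byte(stream: Iterable[bytes],
                       clock: Callable[[], float] = time.perf_counter
                       ) -> Tuple[Optional[float], int]:
    """Consume a byte stream and return (seconds until the first non-empty
    chunk arrived, total bytes). TTFB is None if no bytes were produced."""
    start = clock()
    ttfb = None
    total = 0
    for chunk in stream:
        # Empty chunks (e.g. keep-alives) don't count as the first byte.
        if chunk and ttfb is None:
            ttfb = clock() - start
        total += len(chunk)
    return ttfb, total
```

Because the stream is consumed lazily, any time the producer spends before yielding its first chunk (fetching a segment, starting a transcode) shows up in the measured TTFB, which is the latency a viewer actually waits through.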