LLMs and AI are everywhere these days. Everyone wants to build the next big thing, ship it fast, and maybe even cash out and chill for the rest of their lives. The problem? Most open source AI projects are shared as is. They’re created with the best intentions, but their developers aren’t losing sleep over things like security guardrails or production hardening. That part is left for you to figure out.

If that sounds like the boat you’re in, you’re in the right place. In this blog, we’ll look at how running AI on Kubernetes introduces some very real networking and security challenges, and what you can do about them before your experiment turns into a liability.

Because here’s the thing: AI systems are complicated. Sometimes even the people building them admit they don’t fully know why a model makes the decision it does. But at the end of the day, it’s still just bits moving around computers and network cables, and securing those bits, whether they’re API keys, training data, or model endpoints, is easier than you might think if you know the right patterns.

Are these AI security challenges unique?

Yes and no. On the one hand, the issues AI workloads face (unrestricted network traffic, exposed endpoints, leaked credentials) are the same classic security problems we’ve been wrestling with in IT for decades. On the other hand, AI workloads don’t just move data around; they handle sensitive training datasets, expensive inference workloads, and powerful APIs that can be abused in seconds. A stolen API key doesn’t just mean a small breach; it could mean thousands of dollars in cloud bills or a model that starts spilling the beans to the wrong confidant. A compromised pod doesn’t just leak logs; it could expose proprietary datasets you spent months fine-tuning on, erasing your competitive edge overnight.

So while the problems aren’t entirely new, the stakes are a lot higher. It’s like the difference between leaving your bike unlocked outside a café versus leaving a sports car running with the keys in the ignition. Both are risky, but one is going to attract trouble a lot faster. (Reverse the order if you live in Amsterdam or Vancouver; there it’s the bike, right? ;)

Securing an AI system can feel like taming a “complex beast.” While developers focus on model training and application logic, critical security considerations are often overlooked. This is partly because open source AI projects are typically presented “as is,” and partly because the pace of change in these frameworks is faster than the speed of light. That is why we get frameworks with limited or no built-in security guardrails for production environments.

When deployed in a default Kubernetes cluster, these AI workloads face significant risks:

- Uncontrolled Network Traffic: By default, all pods in a Kubernetes cluster can communicate freely with each other. This creates an open environment where a single compromised component can lead to lateral movement and wider system breaches.

- Sensitive Data Leakage: AI applications frequently handle sensitive API keys to connect with external services. Without proper handling, these keys can be “transferred between your users and platform” over unencrypted channels, where they are vulnerable to eavesdropping.

- Unsecured Network Exposure: Exposing AI endpoints to users or other services without robust ingress security leaves them open to unauthorized access and potential attacks.

A blueprint for securing AI on Kubernetes

Securing AI on Kubernetes does not require reinventing the wheel. Instead, it involves applying “well-known security best practices” from the cloud-native ecosystem using a purpose-built toolset. The examples in this blog reference common CNI implementations (such as Calico or Cilium) that provide frameworks to address the unique security challenges of AI workloads.

Securing ingress to your endpoints (secure the front door)

The first step in preventing API key eavesdropping is to lock down the entry point to your AI workloads. Many frameworks like Ollama, vLLM, or LiteLLM ship with a plain HTTP server out of the box, which means anyone in the path can snoop on your traffic if you’re not careful. When these frameworks run inside Kubernetes, securing their API endpoints with TLS certificates is your first line of defense.

The good news? Kubernetes makes this easy. Using the `Gateway API` standard, you can create a gateway, attach certificates, and ensure traffic to your endpoints is encrypted in transit. That way, sensitive keys and requests don’t travel in clear text.
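To make that concrete, here is a minimal sketch that builds a Gateway with an HTTPS listener and an HTTPRoute pointing at an Ollama Service, then prints them as JSON that `kubectl apply -f -` can read. The resource names, the gateway class, and the TLS Secret name are all assumptions for illustration; 11434 is Ollama's default port. Your gateway controller may need additional fields.

```python
import json


def tls_gateway(name, gateway_class, cert_secret):
    """Build a Gateway with a single HTTPS listener that terminates TLS."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {"name": name},
        "spec": {
            "gatewayClassName": gateway_class,
            "listeners": [{
                "name": "https",
                "protocol": "HTTPS",
                "port": 443,
                "tls": {
                    "mode": "Terminate",
                    # The Secret must hold the certificate and private key.
                    "certificateRefs": [{"kind": "Secret", "name": cert_secret}],
                },
            }],
        },
    }


def route_to_service(name, gateway_name, service, port):
    """Build an HTTPRoute that forwards gateway traffic to a Service."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name},
        "spec": {
            "parentRefs": [{"name": gateway_name}],
            "rules": [{"backendRefs": [{"name": service, "port": port}]}],
        },
    }


# Hypothetical names: adjust to your cluster.
gateway = tls_gateway("ai-gateway", "example-gateway-class", "ai-gateway-tls")
route = route_to_service("ollama-route", "ai-gateway", "ollama", 11434)

manifest = {"apiVersion": "v1", "kind": "List", "items": [gateway, route]}
print(json.dumps(manifest, indent=2))
```

Note that with `Terminate` mode the TLS session ends at the gateway, so the hop from the gateway to the pod is still plain HTTP unless you secure it separately.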

If you’d like to dive deeper into secure gateways, click here.

Control the traffic (Sorry, this cluster is RSVP only)

Next, think about what happens inside the cluster. By default, all pods can talk to each other, like an open office. One compromised pod, and attackers can roam freely. To fix this, you need Kubernetes network policies, a standard way to secure namespaced resources within your cluster. But there is a catch: Kubernetes on its own doesn’t enforce these policies. It relies on your CNI (the policy engine) to enforce them. Keep in mind that each CNI also comes with some “secret sauce” that lets you go beyond what the standard network policy provides.
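As a rough sketch of what this looks like with the standard NetworkPolicy resource, the snippet below builds a default-deny policy for a namespace plus one rule that lets only the gateway pods reach the model server. The namespace, the `app` labels, and the port are assumptions made up for the example.

```python
import json


def default_deny(namespace):
    """Select every pod in the namespace and allow no ingress or egress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
    }


def allow_ingress(namespace, app, from_app, port):
    """Allow TCP traffic to pods labelled app=<app> only from app=<from_app>."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical namespace and labels.
policies = [
    default_deny("ai"),
    allow_ingress("ai", app="ollama", from_app="gateway", port=11434),
]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

One thing to watch: a default-deny on egress also blocks DNS lookups, so you will usually want an extra rule that allows traffic to your cluster DNS.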

For example, some CNIs support Global Network Policies that secure both namespaced and non-namespaced resources. Once you implement such policies, your cluster suddenly has a VIP list, and lateral movement is blocked.
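For a feel of what a cluster-wide policy can look like, here is a sketch modelled on Calico's `GlobalNetworkPolicy` resource: deny everything outside a few system namespaces, while still allowing DNS. The list of excluded namespaces and the DNS label are assumptions, and the field names should be checked against the Calico version you run.

```python
import json

# Hypothetical list of namespaces to leave out of the default deny.
SYSTEM_NAMESPACES = ["kube-system", "calico-system"]


def global_default_deny(excluded_namespaces):
    """Cluster-wide default deny that still lets workloads resolve DNS."""
    quoted = ", ".join(f'"{ns}"' for ns in excluded_namespaces)
    return {
        "apiVersion": "projectcalico.org/v3",
        "kind": "GlobalNetworkPolicy",
        "metadata": {"name": "default-deny"},
        "spec": {
            # Applies to every namespace except the excluded ones.
            "namespaceSelector": (
                "has(projectcalico.org/name) && "
                f"projectcalico.org/name not in {{{quoted}}}"
            ),
            "types": ["Ingress", "Egress"],
            "egress": [{
                "action": "Allow",
                "protocol": "UDP",
                "destination": {
                    # Assumes cluster DNS pods carry the k8s-app=kube-dns label.
                    "selector": 'k8s-app == "kube-dns"',
                    "ports": [53],
                },
            }],
        },
    }


policy = global_default_deny(SYSTEM_NAMESPACES)
print(json.dumps(policy, indent=2))
```

Because the policy lists both `Ingress` and `Egress` but only carries a DNS egress rule, everything else is dropped for the selected workloads until you add explicit allow policies on top.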

If you’d like to learn more about securing your entire cluster, click here.

Cloud AI providers (watch the other doors)

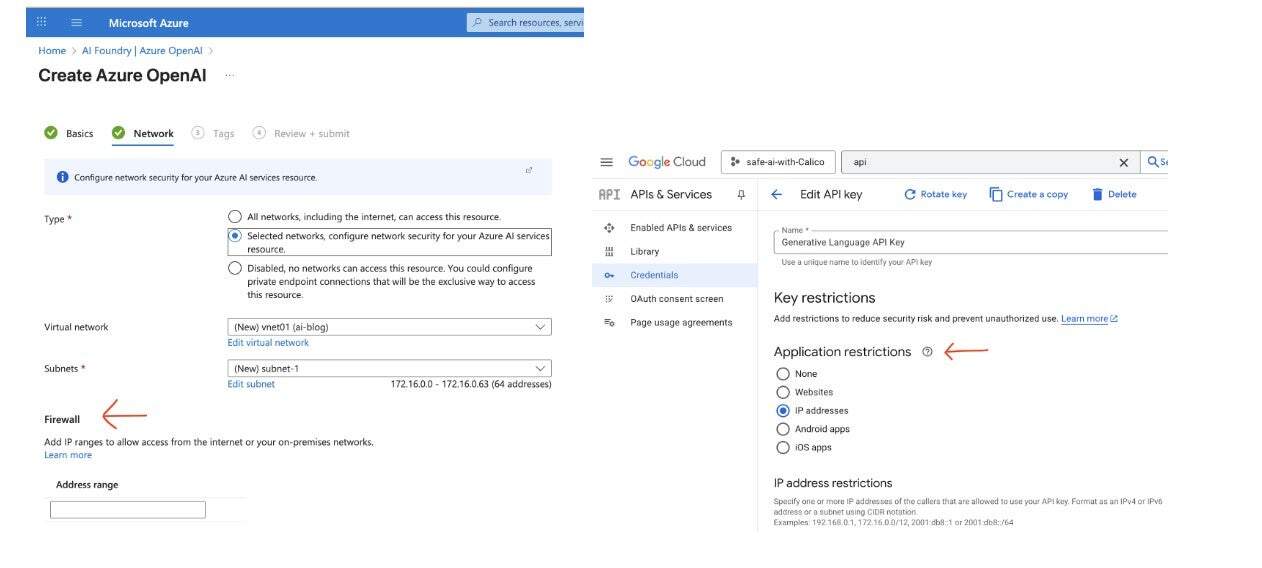

In some cases it might be “cheaper” to use cloud providers instead of building your own AI apparatus. If you go that route, make sure the API keys from these providers are not loosely configured and are actually tied to an identity that only you can use. The easiest form of identity to manage without having your developers touch the code is an IP address restriction.
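The idea behind an IP address restriction is simple enough to sketch in a few lines. The snippet below is not any provider's actual implementation, just an illustration of the check that happens on their side: a key is only honoured when the request arrives from an address on the allowlist. The addresses come from the reserved documentation ranges and are placeholders.

```python
import ipaddress

# Hypothetical allowlist: the public address(es) your cluster egresses from.
ALLOWED_SOURCES = [
    ipaddress.ip_network("203.0.113.10/32"),
]


def is_allowed(source_ip, allowed=ALLOWED_SOURCES):
    """Return True if source_ip falls inside any allowed network."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in network for network in allowed)


print(is_allowed("203.0.113.10"))  # request from the cluster's egress address
print(is_allowed("198.51.100.7"))  # request from anywhere else
```

A stolen key is far less useful when it only works from an address the attacker does not control, which is why the next question is how to give your pods a predictable source address.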

Managing outgoing traffic (Network Address Translation)

At this point, you might be wondering: if Kubernetes pods have ephemeral IPs, how can you ensure your workloads present a consistent IP to cloud providers?

The answer comes down to your CNI of choice.

Depending on your CNI, you can configure Network Address Translation (NAT) to ensure workloads maintain stable, predictable IPs. You can even get granular with inclusions and exclusions, specifying exactly which interfaces or pods should use certain IPs. This gives you a reliable identity for external communications, critical for enforcing API key restrictions or ensuring cloud services recognize your workloads correctly.
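As one possible illustration, Calico exposes outgoing NAT as a setting on its `IPPool` resource. The sketch below builds such a pool; the pool name and CIDR are assumptions, and other CNIs configure this differently, so treat it as a shape to compare against your own CNI's documentation.

```python
import json


def nat_outgoing_pool(name, cidr):
    """Build a Calico IPPool whose pods are source-NATed when leaving the pool."""
    return {
        "apiVersion": "projectcalico.org/v3",
        "kind": "IPPool",
        "metadata": {"name": name},
        "spec": {
            "cidr": cidr,
            # Traffic to destinations outside Calico pools is masqueraded,
            # so external services see a node address, not the pod IP.
            "natOutgoing": True,
        },
    }


# Hypothetical pool name and pod CIDR.
pool = nat_outgoing_pool("ai-workloads", "10.244.0.0/16")
print(json.dumps(pool, indent=2))
```

With this setting, the address a cloud provider sees is only as stable as the addresses of the nodes (or of any upstream NAT in front of them), so that is the address to put on the provider's allowlist.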

Managing outgoing traffic (Egress Gateways)

Egress gateways are another way to control which identity your workloads use when communicating with an endpoint. An egress gateway is a configurable pod or node workload (depending on the CNCF-based solution you have chosen) that gives you a more granular way to control your NATs.

For example, Calico Egress Gateway allows you to run pods that act as a gateway for your workloads and can be managed via independent policies and routes. This allows you to achieve routing isolation for pods that need to talk to AI providers, and to deny other pods or redirect them to other gateways.
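To show the general shape of an egress gateway rule, here is a sketch using Cilium's `CiliumEgressGatewayPolicy` resource rather than the Calico product mentioned above, since the node-based variant is compact enough to fit in a few lines. The labels, destination range, and egress IP are placeholders, and the field names should be verified against your Cilium version.

```python
import json


def egress_policy(name, pod_labels, destinations, node_labels, egress_ip):
    """Send traffic from matching pods to the given CIDRs via a gateway node."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumEgressGatewayPolicy",
        "metadata": {"name": name},
        "spec": {
            # Which pods are subject to this policy.
            "selectors": [{"podSelector": {"matchLabels": pod_labels}}],
            # Which destinations are routed through the gateway.
            "destinationCIDRs": destinations,
            "egressGateway": {
                "nodeSelector": {"matchLabels": node_labels},
                # Source address presented to the destination.
                "egressIP": egress_ip,
            },
        },
    }


# Hypothetical values: only pods labelled app=llm-client use the gateway.
policy = egress_policy(
    name="ai-provider-egress",
    pod_labels={"app": "llm-client"},
    destinations=["203.0.113.0/24"],
    node_labels={"egress-gateway": "true"},
    egress_ip="192.0.2.10",
)
print(json.dumps(policy, indent=2))
```

Pods that do not match the selector keep their normal routing, which is what gives you the isolation between workloads that talk to AI providers and everything else.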

If you’d like to learn more about egress access within Kubernetes, click here.

Observability (What is actually happening inside the house)

In the cloud, observability is everything. Traditional logging and perimeter security just don’t cut it anymore, because they can’t explain why Kubernetes does what it does. Workloads in a distributed system can be running on different nodes, across clusters, or even in different regions. Without context, you’re basically flying blind. That is why one of the hottest topics in cloud native right now is OpenTelemetry, a free, open-source framework and standard that helps capture logs, traces, and metrics from distributed systems and present them in a human-readable way.

Modern CNI tools include observability features such as flow logs, service graphs, and context-aware visibility that show exactly how traffic flows between workloads, which policies are applied, and why. When combined with Grafana and Prometheus, these insights create a clear view into your Kubernetes cluster, turning “mystery connections” into actionable data and enabling a true zero-trust approach for AI workloads.
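To give a sense of what turning flow logs into actionable data means, here is a toy sketch. The record format (`src`, `dst`, `action`) is invented for the example and does not match any particular CNI's flow log schema; real tools do this aggregation for you and add far more context.

```python
from collections import Counter

# Hypothetical flow records, as a CNI's flow log exporter might summarise them.
flows = [
    {"src": "gateway", "dst": "ollama", "action": "allow"},
    {"src": "gateway", "dst": "ollama", "action": "allow"},
    {"src": "batch-job", "dst": "ollama", "action": "deny"},
    {"src": "ollama", "dst": "kube-dns", "action": "allow"},
]


def service_graph(records):
    """Count flows per (source, destination, action) edge."""
    edges = Counter()
    for record in records:
        edges[(record["src"], record["dst"], record["action"])] += 1
    return edges


def denied_edges(records):
    """List the distinct source/destination pairs that a policy blocked."""
    return sorted({(r["src"], r["dst"]) for r in records if r["action"] == "deny"})


for (src, dst, action), count in sorted(service_graph(flows).items()):
    print(f"{src} -> {dst}: {action} x{count}")

print("blocked:", denied_edges(flows))
```

Even this crude view answers the two questions that matter when you tighten policies: who is talking to the model server, and which connections are being dropped.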

If you’d like to learn more about observability, click here.

The bottom line

AI may seem like a new frontier right now, but running into it without proper security is both dangerous and expensive. In this blog post we’ve gone through some simple security adjustments that can help you secure your next bright idea in Kubernetes.