In recent years, the software industry has seen a strong shift toward enabling and supporting Artificial Intelligence (AI) workloads. While a variety of high-level tools like Large Language Models (LLMs) already exist to support generic use cases, many domain-specific solutions are either not yet available or come with significant development costs and risks, particularly when targeting more niche problems.

This raises an important challenge: how can we avoid building a fragmented AI tooling landscape with limited real-world applicability?

To truly support the next wave of AI innovation, especially in cloud native environments, we need to rethink and reinforce the foundation our software components are built on. This includes standardizing formats, improving cross-platform interoperability, and evolving Kubernetes with AI-native features in mind. One of the most promising developments in this space centers on Open Container Initiative (OCI) artifacts.

The role of OCI artifacts in the AI ecosystem

OCI artifacts enable users to store and distribute arbitrary files and metadata using OCI-compliant container registries. While they were originally used for general-purpose distribution (tools like ORAS support them), they are now finding critical roles in AI/ML workflows, especially with the rise of specifications like the CNCF ModelPack.

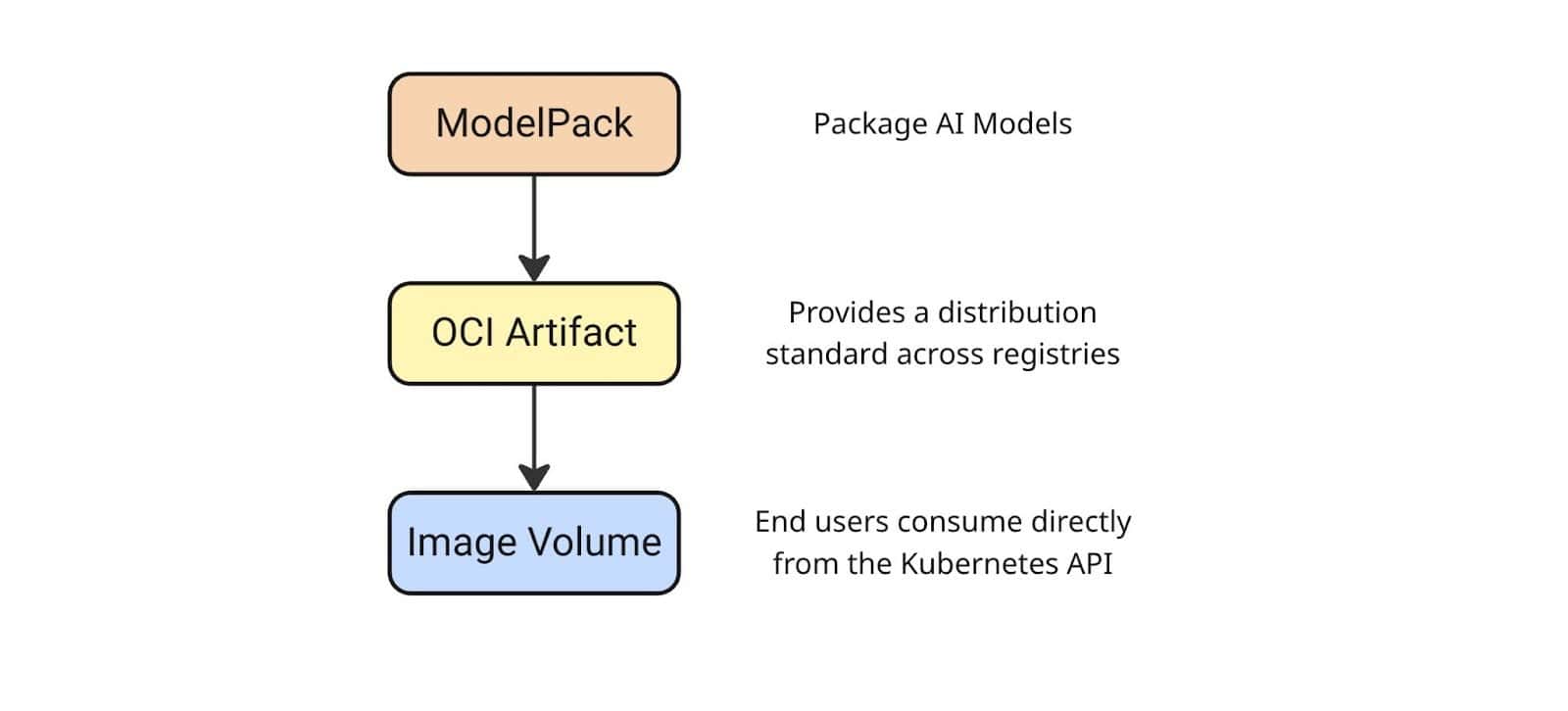

The CNCF ModelPack Specification builds on top of OCI artifacts and aims to standardize the packaging, distribution, and execution of AI models in cloud native environments. By moving away from proprietary formats, ModelPack facilitates reproducibility, portability, and vendor neutrality in machine learning workflows.
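To make the packaging idea more concrete, here is a minimal Python sketch of what an OCI artifact manifest for a model can look like, following the OCI image-spec v1.1 layout: an `artifactType`, an empty config descriptor, and one descriptor per layer, each addressed by its SHA-256 digest. The `application/vnd.example.*` media types and the helper names are hypothetical placeholders, not the media types defined by ModelPack; the specification itself defines those.

```python
import hashlib
import json

# Media types defined by the OCI image-spec (v1.1).
OCI_MANIFEST = "application/vnd.oci.image.manifest.v1+json"
OCI_EMPTY = "application/vnd.oci.empty.v1+json"

# Hypothetical media types for illustration only; a real ModelPack
# manifest uses the media types defined by that specification.
MODEL_ARTIFACT_TYPE = "application/vnd.example.model.v1+json"
MODEL_WEIGHT_LAYER = "application/vnd.example.model.weight.v1.tar"
MODEL_CONFIG_LAYER = "application/vnd.example.model.config.v1+json"


def descriptor(media_type: str, blob: bytes) -> dict:
    """Build an OCI content descriptor: the blob is addressed by its digest."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def build_artifact_manifest(weights: bytes, config: bytes, version: str) -> dict:
    # Artifacts without a meaningful config use the empty descriptor ("{}").
    empty_config = descriptor(OCI_EMPTY, b"{}")
    empty_config["data"] = "e30="  # base64 encoding of "{}"
    return {
        "schemaVersion": 2,
        "mediaType": OCI_MANIFEST,
        "artifactType": MODEL_ARTIFACT_TYPE,
        "config": empty_config,
        "layers": [
            descriptor(MODEL_WEIGHT_LAYER, weights),
            descriptor(MODEL_CONFIG_LAYER, config),
        ],
        "annotations": {"org.opencontainers.image.version": version},
    }


manifest = build_artifact_manifest(b"\x00" * 1024, b'{"precision": "fp16"}', "v1")
# The manifest digest is what a tag resolves to after the manifest is pushed.
manifest_bytes = json.dumps(manifest, separators=(",", ":")).encode()
print("sha256:" + hashlib.sha256(manifest_bytes).hexdigest())
```

Because every layer is referenced by digest, versioning and tracking a model works exactly like it does for container images: a new model version is a new manifest with a new digest.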

This opens the door to several important use cases:

- Standardized AI/ML model packaging: With OCI artifacts, models can be versioned, distributed, and tracked like container images. This promotes consistency and traceability across environments.

- Secure model delivery: Well-established solutions like sigstore signatures offer mechanisms to sign and verify OCI artifacts, improving model integrity and trustworthiness. Artifacts can also be scanned for vulnerabilities or other security issues, just like container images.

- AI/ML model development: For example, ML pipelines can be packaged as OCI artifacts for reuse and sharing across teams, and configuration files can be packaged into artifacts for specific environments.
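Signing tools such as sigstore typically sign the manifest digest, and the integrity of every referenced blob then follows from content addressing: a client re-hashes what it downloads and compares the result with the descriptor. The following Python sketch shows only that digest check, not sigstore signing or verification; `verify_blob`, `DigestMismatch`, and the media type are hypothetical.

```python
import hashlib


class DigestMismatch(Exception):
    """Raised when fetched content does not match its descriptor."""


def verify_blob(descriptor: dict, blob: bytes) -> bytes:
    # Descriptors look like {"mediaType": ..., "digest": "sha256:<hex>", "size": N}.
    algorithm, _, expected = descriptor["digest"].partition(":")
    if algorithm != "sha256":
        raise ValueError(f"unsupported digest algorithm: {algorithm}")
    # Checking the size first rejects truncated or padded downloads cheaply.
    if len(blob) != descriptor["size"]:
        raise DigestMismatch(f"size {len(blob)} != {descriptor['size']}")
    actual = hashlib.sha256(blob).hexdigest()
    if actual != expected:
        raise DigestMismatch(f"digest sha256:{actual} != {descriptor['digest']}")
    return blob


weights = b"model weights"
desc = {
    "mediaType": "application/vnd.example.model.weight.v1.tar",  # hypothetical
    "digest": "sha256:" + hashlib.sha256(weights).hexdigest(),
    "size": len(weights),
}
verify_blob(desc, weights)  # matching content passes
try:
    verify_blob(desc, b"tampered weights")
except DigestMismatch as err:
    print("rejected:", err)
```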

How Kubernetes and OCI artifacts fit together

Having standards around OCI artifacts is essential for building robust AI features into Kubernetes. For example, the Kubernetes Image Volume feature allows pods to mount container image layers as read-only volumes. Image volumes can also reference OCI artifacts, provided the container runtime supports them.
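As an illustration, a Pod that mounts a model artifact through an image volume might look like the manifest built below. This is a sketch assuming a cluster with the `ImageVolume` feature gate enabled and a container runtime that supports OCI artifacts; the image references, names, and mount path are hypothetical. It uses plain Python dictionaries that mirror the Pod API fields (`volumes[].image.reference`, `pullPolicy`) and prints JSON that `kubectl apply -f` accepts.

```python
import json


def pod_with_model_volume(model_ref: str, mount_path: str) -> dict:
    """Build a Pod manifest that mounts an OCI artifact via an image volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "model-server"},  # hypothetical name
        "spec": {
            "containers": [
                {
                    "name": "server",
                    # Hypothetical serving image that reads the model from mount_path.
                    "image": "registry.example.com/inference-server:latest",
                    "volumeMounts": [{"name": "model", "mountPath": mount_path}],
                }
            ],
            "volumes": [
                {
                    "name": "model",
                    # Image volume: the container runtime pulls the reference
                    # and its content is mounted read-only into the container.
                    "image": {"reference": model_ref, "pullPolicy": "IfNotPresent"},
                }
            ],
        },
    }


pod = pod_with_model_volume("registry.example.com/models/demo:v1", "/models")
print(json.dumps(pod, indent=2))
```

Keeping the model out of the serving image means the server image and the model can be versioned, cached, and updated independently.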

Combining image volumes with OCI artifacts enables:

- Efficient sharing of large model files across containers.

- Network-efficient deployment by leveraging content-addressed, deduplicated layers.

- Fast development iterations with model variants that can reuse common base layers.
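Deduplication falls out of content addressing: identical layer content has an identical digest, so a node only needs to download digests it has not stored yet. The sketch below assumes a hypothetical layout where two model variants share a base weights layer and differ only in a small adapter layer; `layers_to_fetch` is an illustrative helper, not part of any runtime API.

```python
import hashlib


def digest(blob: bytes) -> str:
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def layers_to_fetch(manifests: list[list[str]], local: set[str]) -> list[str]:
    """Return each layer digest that is needed but not stored locally, once."""
    needed: list[str] = []
    seen = set(local)
    for layers in manifests:
        for d in layers:
            # Identical content means identical digest, so it is fetched once.
            if d not in seen:
                seen.add(d)
                needed.append(d)
    return needed


# Hypothetical variants: a shared base layer plus a per-variant adapter layer.
base = digest(b"shared base weights")
adapter_a = digest(b"fine-tuned adapter A")
adapter_b = digest(b"fine-tuned adapter B")
variant_a = [base, adapter_a]
variant_b = [base, adapter_b]

# A node that already holds variant A only needs adapter B for variant B.
print(layers_to_fetch([variant_b], local=set(variant_a)))
```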

Tools such as modctl, part of the ModelPack ecosystem, provide a command line interface to manage these models and layers effectively.

However, Kubernetes cannot do all of this alone. The broader ecosystem, especially container runtimes like CRI-O, has to serve as the bridge between low-level OCI standards and high-level Kubernetes features.

CRI-O and OCI artifact support

Recent versions of CRI-O have added native support for OCI artifacts, storing them in a dedicated directory to keep them separate from traditional container image layouts. This enables a range of AI-centric features:

- Mounting OCI artifacts as image volumes in Kubernetes.

- Attaching models, config files, or even assembling full container file systems from a collection of OCI artifacts.

One particularly compelling benefit is architecture independence. OCI artifacts can be platform-agnostic, which makes them ideal for configuration files or other cross-platform assets. When architecture-specific binaries are required, CRI-O also supports multi-architecture manifests (since v1.33). For example, the release bundle at ghcr.io/cri-o/bundle:v1.33.3 allows the runtime to automatically select the appropriate architecture variant from the multi-architecture manifest list.
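The selection step can be sketched as follows: given an OCI image index, pick the manifest whose `platform.os` and `platform.architecture` match the host. This is a simplified sketch, not CRI-O's implementation; real runtimes also consider fields such as `variant` and `os.version`. The index content, digests, and sizes below are placeholders, and the architecture alias table is a partial assumption.

```python
import platform

# Partial mapping from Python's platform.machine() names to OCI architecture names.
ARCH_ALIASES = {"x86_64": "amd64", "AMD64": "amd64", "aarch64": "arm64", "arm64": "arm64"}

# Placeholder image index; a real one is fetched from the registry.
index = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.index.v1+json",
    "manifests": [
        {
            "mediaType": "application/vnd.oci.image.manifest.v1+json",
            "digest": "sha256:" + "a" * 64,  # placeholder digest
            "size": 1000,
            "platform": {"os": "linux", "architecture": "amd64"},
        },
        {
            "mediaType": "application/vnd.oci.image.manifest.v1+json",
            "digest": "sha256:" + "b" * 64,  # placeholder digest
            "size": 1000,
            "platform": {"os": "linux", "architecture": "arm64"},
        },
    ],
}


def select_manifest(index: dict, os_name: str, arch: str) -> dict:
    """Return the first manifest descriptor matching os_name/arch."""
    for desc in index.get("manifests", []):
        plat = desc.get("platform", {})
        if plat.get("os") == os_name and plat.get("architecture") == arch:
            return desc
    raise LookupError(f"no manifest for {os_name}/{arch}")


host_os = platform.system().lower()
host_arch = ARCH_ALIASES.get(platform.machine(), platform.machine())
try:
    print("selected", select_manifest(index, host_os, host_arch)["digest"])
except LookupError as err:
    print(err)
```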

CRI-O is also exploring support for WebAssembly (Wasm) binaries stored as OCI artifacts, building on the Wasm support already available through the crun runtime. This could further expand the range of artifact-based use cases across lightweight and portable compute platforms.

Despite this progress, OCI artifacts are still catching up with traditional container images in terms of feature parity. For instance:

- Signature validation via sigstore is not yet fully supported, though it is on the roadmap.

- Compressed artifact layers, a feature that would benefit workloads dealing with large, compressible files (like datasets or logs), are also planned for the future.

- Supporting read-write image volume mounts, making them less restrictive without compromising runtime security, would help simplify distribution setups.
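For container image layers, the OCI image-spec already defines compressed media types such as `application/vnd.oci.image.layer.v1.tar+gzip`, where the manifest references the digest of the compressed blob. The Python sketch below illustrates why this matters for compressible data such as logs: the compressed blob is much smaller and gets its own digest, distinct from that of the uncompressed content. The log format and the `gzip_layer` helper are hypothetical.

```python
import gzip
import hashlib


def gzip_layer(content: bytes) -> tuple[bytes, str, str]:
    """Compress a layer; return (blob, compressed digest, uncompressed digest)."""
    blob = gzip.compress(content, mtime=0)  # mtime=0 keeps the output reproducible
    return (
        blob,
        "sha256:" + hashlib.sha256(blob).hexdigest(),
        "sha256:" + hashlib.sha256(content).hexdigest(),
    )


# Repetitive data, like many similar log lines, compresses well.
logs = b"".join(b"INFO step=%d loss=0.%03d\n" % (i, i % 1000) for i in range(10_000))
blob, compressed_digest, uncompressed_digest = gzip_layer(logs)
print(f"{len(logs)} bytes -> {len(blob)} bytes")
```

Fixing `mtime` matters here: without it, compressing the same content twice would yield different bytes and therefore different digests, which defeats deduplication.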

As these capabilities mature, OCI artifacts will become an even more essential part of building scalable, secure, and portable AI systems.

Help shape an AI-native Kubernetes

The Kubernetes community is actively working to formalize AI support through new working groups (WGs) focused on various aspects of this transformation:

- WG AI Conformance: Defining what AI support should look like across conformant Kubernetes implementations.

- WG AI Integration: Focusing on integrating AI/ML components into the broader Kubernetes ecosystem.

- WG AI Gateway: Exploring service-oriented interfaces for model serving and inference workloads.

These initiatives highlight the growing importance of AI workloads in cloud native environments. If you’re passionate about Kubernetes and AI, now is a great time to get involved.

Thanks for reading! If this topic resonates with you, consider contributing to the discussions or proposing new features and improvements. If you would like to chat about it, feel free to reach out to me directly via the Kubernetes Slack.