The community was just getting started with k0rdent v0.3.0 when we surprised them with a quick and very special release.

We’re excited to announce k0rdent v1.0.0, a milestone release that solidifies our vision for unified observability and cost optimization in multi-cloud environments. Driven by the k0rdent Cluster Manager (KCM) and enhanced k0rdent Observability & FinOps (kOF) capabilities, this release brings production-grade stability, powerful new features, and significant operational improvements to help platform engineering teams reduce complexity, gain visibility, and control infrastructure costs at scale.

As a recap, k0rdent is a Kubernetes-native distributed container management environment (DCME) that helps platform engineers manage infrastructure at scale. Acting as a “super control plane,” it enables centralized, template-driven lifecycle management of clusters and services across on-prem, cloud, and hybrid environments. Built on open standards, it simplifies the creation of secure, consistent Internal Developer Platforms (IDPs) for modern workloads.

As a reminder, the core components of k0rdent are:

- KCM (Cluster Manager): Manages lifecycle, upgrades, and scaling of Kubernetes clusters via Cluster API

- KSM (State Management): Deploys and manages services (e.g., Istio, Flux, cert-manager) using templated, declarative ServiceTemplates

- kOF (Observability & FinOps): Provides metrics, logging, dashboards, and cost visibility through integrations with VictoriaMetrics and OpenCost

Documentation: QuickStart Guide

Source Code: GitHub

Key Highlights in v1.0.0

The key highlights of version 1.0.0 include:

- Stabilized v1beta1 APIs across all components

- Production-grade multi-cluster support

- Enterprise-ready service templating and orchestration

- Integrated observability for GPU and Kubernetes control plane metrics

The idea behind this release was to focus on new capabilities:

Enhancements to k0rdent Cluster Manager (KCM)

- ServiceTemplateChains: Enable conditional, ordered service deployments with defined upgrade paths ([#1433])

The previous design of ServiceTemplateChains fell short in enabling effective upgrade path management for services on managed clusters. Key limitations included ambiguous chain selection when multiple chains referenced the same template, lack of visibility into upgrade availability, and no distinction between sequential and direct upgrades. Additionally, the absence of versioning and the unclear relationships between core resources hindered upgrade validation and system predictability. Addressing these gaps was essential for delivering safe, transparent, and manageable service upgrades at scale.

To improve clarity and control in service upgrades, we are introducing a structured resource hierarchy (ClusterDeployment (CD) / MultiClusterService (MCS) → ServiceTemplateChain → ServiceTemplate) alongside versioning for ServiceTemplates to define explicit upgrade paths. Additionally, environment-specific upgrade strategies can now be modeled using distinct ServiceTemplateChains, enabling safer and more predictable rollouts across diverse infrastructure contexts.
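To make the idea of explicit upgrade paths more concrete, here is a minimal Python sketch that treats the versions in a chain as a small graph and distinguishes direct upgrades from sequential ones. The data layout (a list of from/to version pairs) and every name in it are assumptions for illustration only; this is not the actual ServiceTemplateChain schema or the controller's logic.

```python
from collections import deque

# Hypothetical upgrade edges for one service: (from_version, to_version).
# This layout is an illustration only, not the real ServiceTemplateChain schema.
UPGRADE_EDGES = [
    ("1.0.0", "1.1.0"),
    ("1.1.0", "1.2.0"),
    ("1.0.0", "1.2.0"),  # a direct upgrade that skips 1.1.0
    ("1.2.0", "2.0.0"),
]


def direct_upgrades(edges, current):
    """Versions reachable from `current` in a single step."""
    return sorted(to for frm, to in edges if frm == current)


def shortest_upgrade_path(edges, current, target):
    """Breadth-first search for the shortest chain of upgrades, or None."""
    queue = deque([[current]])
    seen = {current}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in direct_upgrades(edges, path[-1]):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None
```

With these sample edges, `direct_upgrades(UPGRADE_EDGES, "1.0.0")` lists the versions available in one step, while `shortest_upgrade_path(UPGRADE_EDGES, "1.0.0", "2.0.0")` returns the sequence of intermediate versions a sequential upgrade would pass through, or `None` when no path is defined.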

- Global Values: The ability to share reusable variables across templates to support consistent multi-cluster configurations ([#1535])

We tested installation and AWS cluster creation both with and without global values. All templates were rendered via helm template, and we verified that the output was correct. If global values aren’t set, the templates behave exactly as they did before these changes.

We left templates untouched where global values make no sense: managed Kubernetes clusters (such as EKS) and adopted clusters.
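As a rough illustration of how shared values can combine with per-template values, the Python sketch below overlays template-specific values on top of a set of global ones. The key names and the precedence rule (template values win over global values) are assumptions made for this example, not a description of how the k0rdent charts actually merge values.

```python
import copy


def merge_values(global_values, template_values):
    """Recursively overlay template_values on top of global_values.

    Assumption for this sketch: template-specific values take precedence.
    When no global values are supplied, the template values come back unchanged.
    """
    merged = copy.deepcopy(global_values or {})
    for key, value in template_values.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical values; the keys are placeholders, not real chart parameters.
shared = {"registry": "registry.example.com", "proxy": {"http": "http://proxy:3128"}}
per_template = {"workersNumber": 3, "proxy": {"noProxy": "10.0.0.0/8"}}
rendered = merge_values(shared, per_template)
```

Passing `None` or an empty dict as the global values returns the template values as they were, which mirrors the backward-compatible behavior described above.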

- IPAM Controller Integration: Automate IP address management across heterogeneous environments ([#1260])

The goal was to deliver a solution that automates IP address allocation and DNS record updates using Cluster API’s In-Cluster IPAM Provider. This solution aims to reduce manual intervention, ensure network consistency, and improve overall operational efficiency. Stakeholders including platform leads, network operations managers, and security teams benefit from fewer manual tasks and more accurate network records.

The major deliverables were:

- Automated IP Allocation: A solution that assigns IP addresses based on approved business rules and policies, reducing manual effort.

- Automated DNS Updates: A process that ensures DNS records are updated accurately and on time when IPs are allocated.

- Reusable ServiceTemplate: A clear, declarative template that encapsulates the business logic for IP management.
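The allocation step in the deliverables above can be sketched with Python's standard ipaddress module. The `IPPool` class, the claim names, and the "first free host address" policy are hypothetical stand-ins chosen for illustration; the real work is done by the Cluster API IPAM provider, whose behavior may differ.

```python
import ipaddress


class IPPool:
    """Toy in-memory address pool; a hypothetical stand-in, not the CAPI IPAM provider."""

    def __init__(self, cidr, reserved=()):
        self.network = ipaddress.ip_network(cidr)
        self.reserved = {ipaddress.ip_address(a) for a in reserved}
        self.claims = {}  # claim name -> allocated address

    def allocate(self, claim_name):
        """Return the address for a claim, allocating the first free host if needed."""
        if claim_name in self.claims:
            return self.claims[claim_name]  # idempotent: same claim, same address
        in_use = self.reserved | set(self.claims.values())
        for addr in self.network.hosts():
            if addr not in in_use:
                self.claims[claim_name] = addr
                return addr
        raise RuntimeError(f"pool {self.network} is exhausted")

    def release(self, claim_name):
        """Free the address held by a claim, if any."""
        self.claims.pop(claim_name, None)
```

Keeping allocation idempotent per claim name means a repeated reconcile of the same claim does not consume additional addresses, which is the property a declarative controller generally needs.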

Assumptions:

- The underlying infrastructure supports the proposed automation.

- Existing business policies for IP allocation are clear and can be effectively translated into the solution.

Limitations:

- The initial release will focus solely on one automated IPAM solution.

- Customization is limited to the predefined business rules and policies.

- More advanced network or DNS configurations will not be included in this phase.

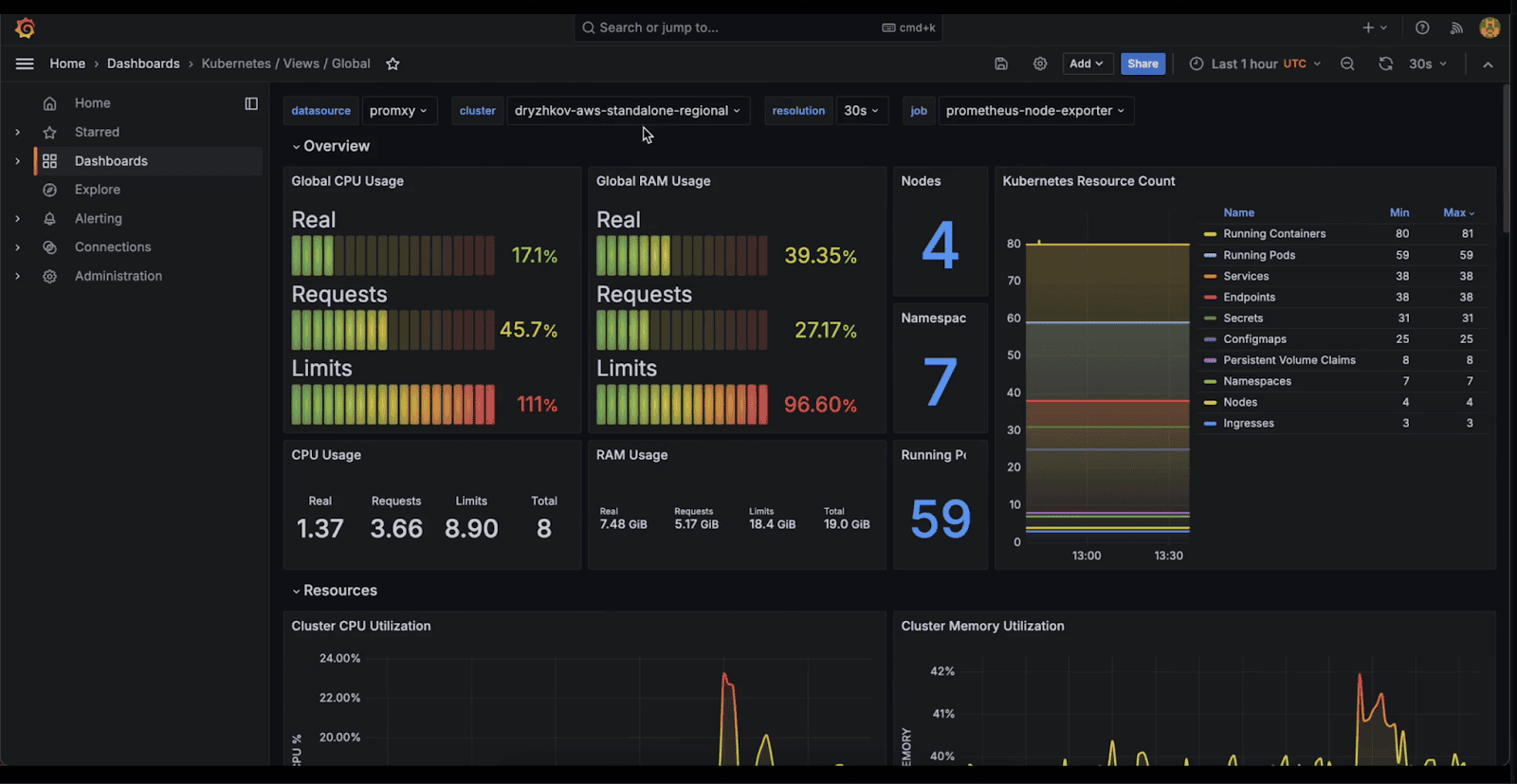

k0rdent Observability & FinOps (kOF)

As organizations scale Kubernetes operations across cloud and on-prem environments, observability and financial accountability become critical. The k0rdent Observability & FinOps (kOF) module is purpose-built to address these needs by integrating robust metrics, logging, and cost visibility into the k0rdent platform.

The kOF repository delivers a complete, composable observability stack with first-class support for multi-cluster monitoring, GPU utilization tracking, control plane health visibility, and Kubernetes-native FinOps reporting using open standards.

It builds on industry-leading tools such as:

- VictoriaMetrics for scalable, long-term time series metrics and logging

- Grafana for customizable, pre-configured dashboards

- OpenCost for Kubernetes cost monitoring and FinOps alignment

- OpenTelemetry for structured observability of control plane components

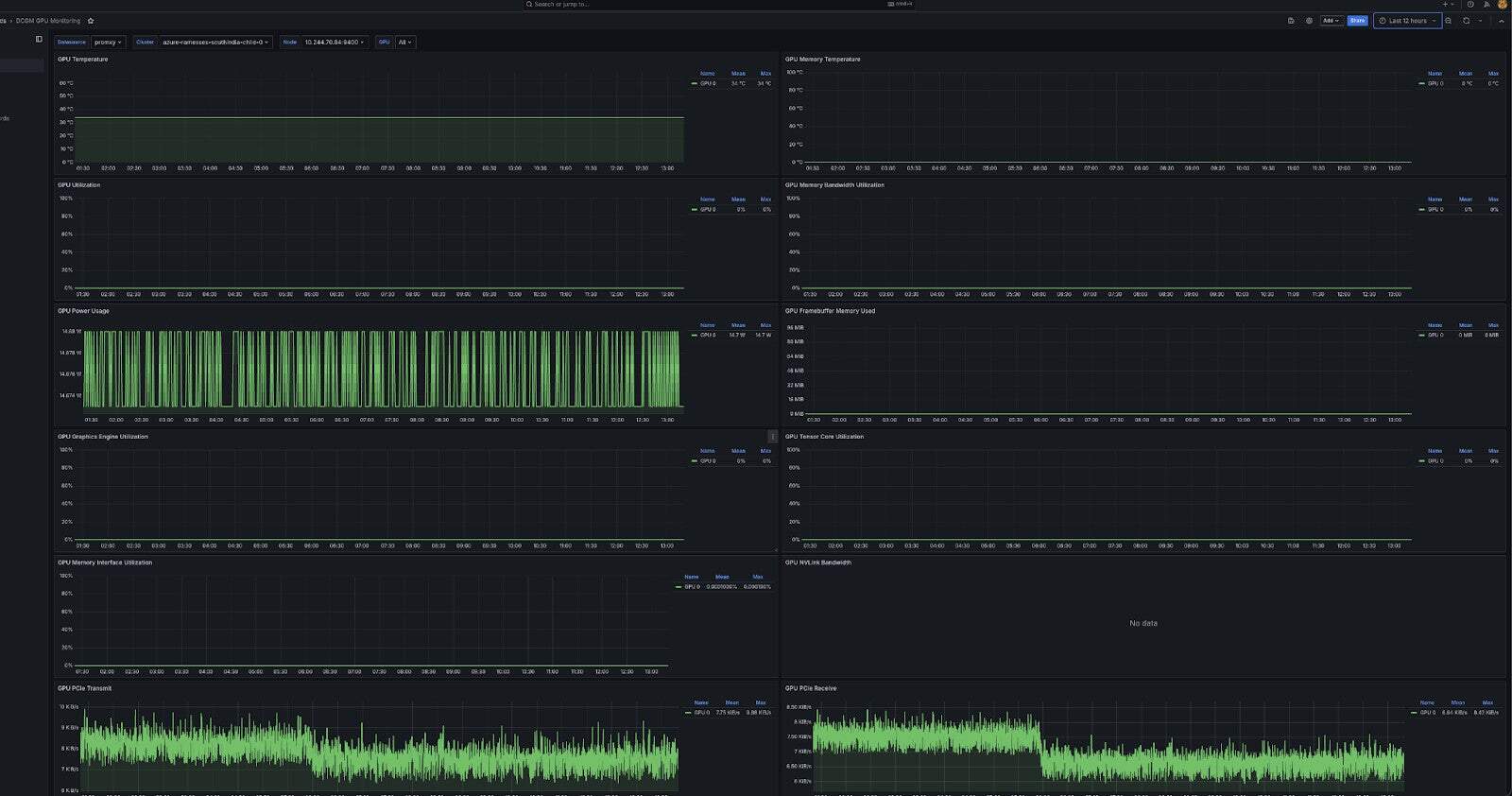

1. NVIDIA GPU Monitoring

Out-of-the-box Grafana dashboards provide real-time GPU utilization metrics for workloads running on NVIDIA-powered nodes. This is especially useful for AI/ML environments where GPU resources must be carefully tracked and optimized.

The dashboard is based on the official NVIDIA dcgm-exporter dashboard, with a few additional template keys inherited from other existing dashboards in the repo.

The dashboard assumes that the DCGM Exporter metrics are being scraped. This requires a ServiceMonitor resource that points to the DCGM Exporter’s metrics endpoint.

Note – While the NVIDIA GPU Operator deploys the DCGM Exporter, it does not, by default, create a ServiceMonitor resource for it.
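Because the ServiceMonitor has to be created separately, the following Python sketch builds one as a plain dictionary and prints it as JSON, which kubectl accepts in place of YAML. The namespace, the Service label, and the port name used here are assumptions; check them against the Service that the GPU Operator created in your cluster before applying anything.

```python
import json


def dcgm_service_monitor(namespace, service_labels, port_name, interval="30s"):
    """Build a Prometheus Operator ServiceMonitor manifest for the DCGM Exporter."""
    return {
        "apiVersion": "monitoring.coreos.com/v1",
        "kind": "ServiceMonitor",
        "metadata": {"name": "dcgm-exporter", "namespace": namespace},
        "spec": {
            # Must match the labels on the DCGM Exporter Service.
            "selector": {"matchLabels": dict(service_labels)},
            # Must match a named port on that Service.
            "endpoints": [{"port": port_name, "interval": interval}],
        },
    }


# Assumed values; verify with `kubectl get svc -n <namespace> --show-labels`.
manifest = dcgm_service_monitor(
    namespace="gpu-operator",
    service_labels={"app": "nvidia-dcgm-exporter"},
    port_name="gpu-metrics",
)
print(json.dumps(manifest, indent=2))
```

The output can be saved to a file and applied with `kubectl apply -f`, provided the Prometheus Operator CRDs are installed in the cluster.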

Feature reference: [#257]

2. Refactoring API Metrics

In this kOF release, Kubernetes API metrics collection has been refactored and a misconfiguration of the Prometheus receiver has been fixed. This is a small step towards unification with the opentelemetry-kube-stack Helm chart collectors.

The changes made include:

- Moving kubernetes-api metrics collection to a service monitor

- Removing prometheus receiver from cluster collector

Feature reference: [#259]

3. Advanced Logging

Community feedback asked for log storage that is highly available and scalable.

The single-node VictoriaLogs deployment (VLS) used so far did not have a clustered version at the time, so the original proposal was to add a configuration layer that would allow deploying other solutions such as OpenSearch or Loki.

That approach would pair well with an intermediate buffer for logs (#177), against which a “fanout” mechanism such as Kafka connectors or Logstash could run, making it easy to add any specific storage that users might need.

Since then, VictoriaLogs has gained a cluster mode (https://docs.victoriametrics.com/victorialogs/cluster/), which is the direction kOF has taken.

With support for VictoriaLogs in clustered mode, kOF enhances log management performance, retention, and query scalability. The transition from victoria-logs-single to victoria-logs-cluster introduces support for multi-tenant log storage and long-term archival.

Feature reference: [#274]

4. Per-Cluster Configuration via Annotations

kOF users were setting up labs with many users, and installing self-signed certificates is a normal part of standing up a quick lab. We expected the wider community to do the same at some point.

So we needed the ability to configure tls_skip_verify for the PromxyServerGroup and GrafanaDatasource objects.

kOF was already passing an HTTPClientConfig that included TLSConfig.InsecureSkipVerify, but there was no clean way to set it via values, and the same was true for GrafanaDatasource.

PR [#276] allows per-cluster customization of HTTP client parameters for Promxy and Grafana data sources using Kubernetes annotations. This helps fine-tune cross-cluster communication and security settings.
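The general pattern of driving per-cluster HTTP client settings from annotations can be sketched as follows. The annotation key `example.com/http-client-config`, the JSON payload shape, and the function name are all hypothetical, chosen only to show the idea; consult the kOF documentation for the annotation names it actually reads.

```python
import json

# Hypothetical annotation key, used only for this illustration.
HTTP_CONFIG_ANNOTATION = "example.com/http-client-config"


def http_config_for(annotations):
    """Return HTTP client settings for a cluster, with safe defaults.

    TLS verification stays enabled unless the cluster's annotation
    explicitly overrides it.
    """
    config = {"tls_config": {"insecure_skip_verify": False}}
    raw = annotations.get(HTTP_CONFIG_ANNOTATION)
    if raw:
        override = json.loads(raw)
        config["tls_config"].update(override.get("tls_config", {}))
    return config


# A lab cluster that uses self-signed certificates opts out of verification.
lab_annotations = {
    HTTP_CONFIG_ANNOTATION: '{"tls_config": {"insecure_skip_verify": true}}'
}
lab_config = http_config_for(lab_annotations)
```

Defaulting to verified TLS and requiring an explicit per-cluster opt-out keeps the insecure setting confined to the lab clusters that need it.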

5. Resource Configuration and Cost Controls

Administrators can customize CPU and memory limits for observability stack components to ensure consistent performance across heterogeneous environments and optimize infrastructure costs.

Feature reference: [#263]

FinOps Integration: OpenCost

The kOF module integrates OpenCost, a CNCF sandbox project, to bring transparent cost visibility directly into Kubernetes-native workflows. This enables platform teams and finance stakeholders to:

- Analyze cost by namespace, workload, or label

- Correlate resource usage with actual spend

- Drive cost optimization decisions with accurate data

The image below is a diagram of kOF showing what happens in a regional cluster:

GitOps-Ready and Enterprise-Compatible

The kOF Helm charts and manifests are fully compatible with GitOps workflows, including ArgoCD and FluxCD. Configurations are declarative and support secure delivery pipelines across environments.

Stability and Reliability Improvements for v1.0.0:

- Resolved CR reference issues in IPAM and OpenStack providers ([#1522], [#1496])

As described in issue [#1198], k0rdent needed an IPAM controller that reconciles ClusterIpamClaims and produces ClusterIpams, which in turn reference IPAddressClaims.

- Enhanced end-to-end test reliability and configuration handling ([#1517], [#1463])

- Improved Helm chart resource tuning for VictoriaMetrics services ([#279])

- Fixed Helm repository compatibility and remote secret issues for Istio ([#282], [#270])

Upgrade Notes

- v1alpha1 APIs remain available in this release but are now officially deprecated. Users should migrate to v1beta1 versions of ClusterTemplate, ServiceTemplate, and ProviderTemplate resources.

- All custom tooling should be updated to accommodate v1beta1 as support for v1alpha1 will be removed in a future release.

- It is recommended to back up the management cluster using Velero prior to upgrading.

- A detailed upgrade guide is available at Upgrade Documentation.
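When updating custom tooling, a quick first pass is to find manifests that still reference the deprecated API version. The Python sketch below does that for manifest text and rewrites the apiVersion line. The API group name `k0rdent.mirantis.com` is an assumption to verify against the CRDs installed in your management cluster, and the sketch only changes the apiVersion string; it does not account for any schema differences between v1alpha1 and v1beta1, so the upgrade guide remains the authority.

```python
import re

# Assumed API group; verify against the CRDs in your management cluster.
API_GROUP = "k0rdent.mirantis.com"

# Matches a whole `apiVersion: <group>/v1alpha1` line, keeping the prefix in group 1.
DEPRECATED = re.compile(
    rf"^([ \t]*apiVersion:[ \t]*){re.escape(API_GROUP)}/v1alpha1[ \t]*$",
    re.MULTILINE,
)


def find_deprecated(manifest_text):
    """Return 1-based line numbers that still reference v1alpha1."""
    return [
        number
        for number, line in enumerate(manifest_text.splitlines(), start=1)
        if DEPRECATED.match(line)
    ]


def migrate(manifest_text):
    """Rewrite v1alpha1 apiVersion lines to v1beta1, leaving everything else alone."""
    return DEPRECATED.sub(rf"\g<1>{API_GROUP}/v1beta1", manifest_text)
```

Running `find_deprecated` over a repository of manifests gives a list of lines to review, and `migrate` can then be applied file by file once each resource has been checked against the upgrade documentation.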

Deprecations and Known Issues

Deprecated

- All v1alpha1 APIs

- The legacy victoria-logs-single logging backend (migrate to victoria-logs-cluster)

Known Issues

- Grafana dashboards may take up to 60 seconds to initialize following cluster deployment

- Conflicting priorities in MultiClusterService definitions may require manual resolution

- IPAM reconciliation may be delayed in high-latency network environments

- Velero restores across cloud providers may require exclusion of certain resources (see documentation for guidance)

Release Metadata

| Key | Value |
|---|---|
| Helm Charts | kcm: 1.0.0, kof: 1.0.0, ksm: 1.0.0 |
| OCI Registry | ghcr.io/k0rdent |
| SBOM | Not included in OSS |
| OCI Signature Support | Enterprise only |
| Release Tags | v1.0.0 across all components |

🙌 Contributors

Huge thanks to the following contributors for making this release possible:

@gmlexx, @denis-ryzhkov,

@ramessesii2, @aglarendil,

@kylewuolle, @a13x5,

@eromanova, @zerospiel,

@BROngineer, @cdunkelb

Component & Provider Versions

| Component / Provider | Version |
|---|---|
| Cluster API | v1.9.7 |
| CAPI Provider AWS | v2.8.2 |
| CAPI Provider Azure | v1.19.4 |
| CAPI Provider Docker | v1.9.6 |
| CAPI Provider GCP | v1.8.1 |
| CAPI Provider Infoblox | v0.1.0-alpha.8 |
| CAPI Provider IPAM | v0.18.0 |
| CAPI Provider k0smotron | v1.5.2 |
| CAPI Provider OpenStack (ORC) | v0.12.3 / v2.1.0 |
| CAPI Provider vSphere | v1.13.0 |
| Project Sveltos | v0.54.0 |

Learn More

Join the k0rdent Community

Platform engineers today face increasing demands—but they don’t have to tackle them alone. k0rdent is 100% open-source and community-driven, offering the flexibility, tools, and ecosystem needed to manage distributed infrastructure efficiently.

Built by an international team of passionate developers, k0rdent thrives on collaboration. We welcome contributions and ideas to expand and improve the project.

Get Involved:

⭐️Drop a star to support the k0rdent project

✅ Explore the k0rdent Community repo on GitHub

✅ Join the #k0rdent channel on CNCF Community Slack (Sign up for CNCF Slack, then join #k0rdent)

✅ Sign up via our Community Invitation Form to attend Team k0rdent’s regular Office Hours

Be part of the movement—let’s build the future of Kubernetes-native infrastructure together!

Getting Started

You can try out k0rdent v1.0.0 today by following the QuickStart guide and deployment instructions. For feedback, questions, or support, please reach out via email, Slack, or GitHub Issues. We look forward to hearing about your experience.