Argo Workflows is an open-source, container-native workflow engine for orchestrating jobs on Kubernetes. Workflows are defined as Kubernetes custom resources, and each step runs in its own container. Steps can be modeled as a sequence or as a directed acyclic graph (DAG) that captures the dependencies between tasks. This makes Argo Workflows a good fit for CI/CD pipelines as well as compute-intensive jobs, such as machine learning or data processing, on Kubernetes.

If you’re just getting started with CI/CD pipelines on Kubernetes, Argo Workflows offers a Kubernetes-native way to automate your build pipelines.

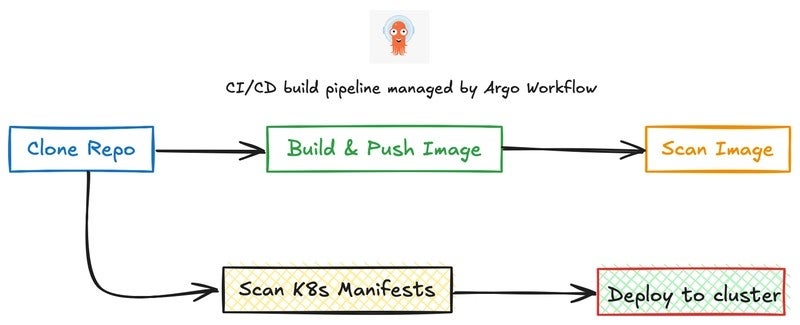

In this blog, we’ll walk through a complete Continuous Integration (CI) and Continuous Deployment (CD) pipeline using Argo Workflows that performs the following steps:

🔁 **Pipeline Overview:**

> ✅ Clone a GitHub repository

> 🔧 Build and push a Docker image using BuildKit

> 🔐 Scan the Docker image for vulnerabilities

> 🔍 Scan Kubernetes manifests for misconfigurations

> ⏭ Deploy Kubernetes manifests to your cluster

Let’s break down each part of the workflow. Before doing so, let’s first go over the basics of my Argo Workflow template.

⚙️ **Understanding the Argo Workflow Structure**

Argo Workflows is a Kubernetes-native workflow engine where each CI/CD process is defined as a custom resource (Workflow or WorkflowTemplate). In my case, I used a WorkflowTemplate named ci-build-workflow, which can be triggered manually or automatically through a CronWorkflow.

The workflow is defined through Argo’s CRDs (Custom Resource Definitions), and its metadata includes either `name` or `generateName`. The resulting workflow name is used as a prefix for the names of the pods in which each workflow step (or template) runs.
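
To make that structure concrete, here is a minimal sketch of how such a WorkflowTemplate could be laid out. Only the name `ci-build-workflow` and the `main` entry point come from this article; the `argo` namespace is an assumption, and the task list is left as a placeholder.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: WorkflowTemplate
metadata:
  name: ci-build-workflow      # a one-off Workflow would typically use generateName instead
  namespace: argo              # assumption: the namespace where Argo Workflows is installed
spec:
  entrypoint: main             # the template that runs first
  templates:
    - name: main
      dag:
        tasks: []              # placeholder; the real tasks are covered in the DAG section
```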

📦 **Volume Handling**

Just like regular Kubernetes pods, you can declare volumes in Argo workflows. These volumes—like work or buildkitd—are mounted across different steps, enabling shared storage between containers. This is especially useful for tasks that rely on common directories (like cloning a repo and building from it).

🔁 **Parameterized Arguments**

Argo allows the use of parameters in workflows to make them dynamic. In my CI workflow, I’ve defined parameters such as the GitHub repo owner, repo name, Docker image tag, registry name, etc. These parameters can be passed into the workflow during runtime or set statically in a CronWorkflow.
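
As a rough sketch of how this looks in practice, workflow-level parameters can be declared under `spec.arguments` and referenced with `{{workflow.parameters.<name>}}`. The parameter names and default values below are illustrative assumptions, not necessarily the ones used in my template.

```yaml
spec:
  arguments:
    parameters:
      - name: owner            # hypothetical workflow-level parameters with defaults
        value: cloud-hacks
      - name: repo
        value: argocd-io
  templates:
    - name: main
      dag:
        tasks:
          - name: clone-repo
            template: clone
            arguments:
              parameters:
                - name: owner
                  value: "{{workflow.parameters.owner}}"   # resolved when the workflow is submitted
                - name: repo
                  value: "{{workflow.parameters.repo}}"
```

With a layout like this, a default can be overridden at submit time, for example with `argo submit --from workflowtemplate/ci-build-workflow -p repo=another-repo`.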

🧩 **Workflow Entry Point: DAG Template**

```yaml
- name: main
  dag:
    tasks:
      - name: clone-repo
        ...
      - name: build-image
        ...
        depends: clone-repo
      - name: scan-image
        ...
        depends: build-image
      - name: scan-k8s
        ...
        depends: clone-repo && build-image
      - name: deploy-kubernetes
        ...
        depends: build-image && scan-k8s && clone-repo
```

The pipeline uses a DAG (Directed Acyclic Graph) to run tasks in order:

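– `build-image` runs after cloning

– `scan-image` and `scan-k8s` both run after the image is built, so they can run in parallel

– `deploy-kubernetes` runs after the repo is cloned, the image is built, and the k8s manifests are scanned. Note that, as written, it does not wait for `scan-image`.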

This setup ensures that tasks are run in the correct order without unnecessary blocking.

📂 **Volumes & Storage**

The workflow uses a shared `Persistent Volume Claim` (PVC) to exchange files between steps:

```yaml
volumeClaimTemplates:
  - metadata:
      name: work
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 64Mi
```

Additionally, `BuildKit` uses an `emptyDir` volume for its internal state:

```yaml
volumes:
  - name: buildkitd
    emptyDir: {}
```

Before we can build and push container images to a Docker registry like Docker Hub, we need to authenticate. Argo Workflows uses Kubernetes secrets to securely store and access these credentials during execution.

In our workflow, the build and push steps rely on credentials to authenticate with Docker Hub:

```yaml
volumes:
  - name: docker-creds
    secret:
      secretName: '{{inputs.parameters.docker_secret_name}}'
      items:
        - key: .dockerconfigjson
          path: config.json
```

This volume mount makes your Docker config file available inside the container at runtime, which is required for tools like BuildKit to push images securely.

_How to Create the Docker Secret:_

To create the Kubernetes secret from your local Docker config file (stored under the `.dockerconfigjson` key), run:

```shell
kubectl create secret generic docker-config \
  --from-file=.dockerconfigjson="$HOME/.docker/config.json" \
  --type=kubernetes.io/dockerconfigjson
```

In the workflow, we refer to this secret using:

```yaml
- name: docker_secret_name
  value: docker-config
```

With the secret in place, your workflow can securely authenticate and push the built image to Docker Hub, completing the CI loop without exposing any sensitive information.

Now, let’s understand every template involved in this case.

📦 **Step 1: Cloning the Repository**

```yaml
- name: clone-repo
  template: clone
  arguments:
    parameters:
      - name: owner
        value: cloud-hacks
      - name: repo
        value: argocd-io
      - name: ref
        value: main
      - name: clone_path
        value: /work
```

We’re using the `alpine/git` image to shallow-clone a GitHub repository. It stores the code in a shared volume (`/work`) so later steps like build and scan can use it.
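
The task above only passes arguments; the `clone` template it points to could look roughly like the sketch below. This is an assumption about the template's shape rather than a copy of my actual file: the image tag and the exact `git clone` flags are illustrative, and cloning straight into the mount root assumes the volume is empty.

```yaml
- name: clone
  inputs:
    parameters:
      - name: owner
      - name: repo
      - name: ref
      - name: clone_path
  container:
    image: alpine/git:latest          # assumption: the tag is not stated in this article
    command: [git]
    args:
      - clone
      - --depth
      - "1"                           # shallow clone: fetch only the latest commit
      - --branch
      - "{{inputs.parameters.ref}}"
      - --single-branch
      - "https://github.com/{{inputs.parameters.owner}}/{{inputs.parameters.repo}}.git"
      - "{{inputs.parameters.clone_path}}"
    volumeMounts:
      - name: work
        mountPath: /work              # shared PVC, visible to later steps
```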

🛠️ **Step 2: Building and Pushing the Docker Image**

```yaml
- name: build-image
  template: build-image
  arguments:
    parameters:
      - name: image
        value: example/nginx
      - name: path
        value: .
      - name: version
        value: v4
      - name: registry
        value: docker.io
      - name: docker_secret_name
        value: docker-config
      - name: insecure
        value: "false"
  depends: clone-repo
```

We use the rootless BuildKit container (`moby/buildkit`) to build the Docker image. It reads the source from `/work`, builds the image, and pushes it when `push=true` is set in `--output`. We also provide:

– The Docker image name and version

– Docker registry credentials via a Kubernetes secret

– Secure or insecure registry flag

Note: In this template, `push=false` is set — if you want to push, change it to `push=true`.
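
For orientation, a `build-image` template along these lines could look like the sketch below. It is modeled on the usual rootless BuildKit pattern and is not a verbatim copy of my template: the image tag, the `/.docker` mount path, and the way the parameters are combined into the image name are assumptions, and the `buildkitd` volume is left out for brevity.

```yaml
- name: build-image
  inputs:
    parameters:
      - name: image
      - name: path
      - name: version
      - name: registry
      - name: docker_secret_name
      - name: insecure
  volumes:
    - name: docker-creds
      secret:
        secretName: "{{inputs.parameters.docker_secret_name}}"
        items:
          - key: .dockerconfigjson
            path: config.json
  container:
    image: moby/buildkit:rootless                # assumption: exact tag not given here
    workingDir: "/work/{{inputs.parameters.path}}"
    env:
      - name: BUILDKITD_FLAGS
        value: --oci-worker-no-process-sandbox   # commonly needed for rootless mode
      - name: DOCKER_CONFIG
        value: /.docker                          # directory that contains config.json
    command: [buildctl-daemonless.sh]
    args:
      - build
      - --frontend
      - dockerfile.v0
      - --local
      - context=.
      - --local
      - dockerfile=.
      - --output
      # push=false matches the note above; switch to push=true to publish the image
      - "type=image,name={{inputs.parameters.registry}}/{{inputs.parameters.image}}:{{inputs.parameters.version}},push=false,registry.insecure={{inputs.parameters.insecure}}"
    volumeMounts:
      - name: work
        mountPath: /work
      - name: docker-creds
        mountPath: /.docker
```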

🐛 **Step 3: Scanning the Image with Trivy**

```yaml
- name: scan-image
  template: scan-image
  arguments:
    parameters:
      - name: image
        value: example/nginx:v4
      - name: severity
        value: CRITICAL,HIGH
      - name: exit-code
        value: "0"
  depends: build-image
```

Here, we use Trivy to scan the Docker image (`example/nginx:v4`) for known vulnerabilities. This helps catch issues before the image is deployed.

– We scan for CRITICAL and HIGH severity vulnerabilities

– `exit-code: 0` ensures the workflow doesn’t fail even if vulnerabilities are found (customize this as needed)

– Trivy pulls the image from Docker Hub, so make sure the build-image step pushes the image first.
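
A `scan-image` template that consumes these parameters might be sketched as follows. The image tag is an assumption; the flags are the standard `trivy image` options for severity filtering and exit code.

```yaml
- name: scan-image
  inputs:
    parameters:
      - name: image
      - name: severity
      - name: exit-code
  container:
    image: aquasec/trivy:latest       # assumption: the tag is not stated in this article
    command: [trivy]
    args:
      - image
      - --severity
      - "{{inputs.parameters.severity}}"    # e.g. CRITICAL,HIGH
      - --exit-code
      - "{{inputs.parameters.exit-code}}"   # "1" would fail the step when findings exist
      - "{{inputs.parameters.image}}"
```

Setting `exit-code` to `"1"` is the usual way to turn the scan into a gate, so that a failed scan marks the task as failed.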

🛡 **Step 4: Scanning Kubernetes Manifests with Kubescape**

```yaml
- name: scan-k8s
  template: scan-k8s
  arguments:
    parameters:
      - name: path
        value: /work/dev
      - name: verbose
        value: "true"
  depends: clone-repo && build-image
```

We’re using `kubescape` to scan the Kubernetes YAML files located in `/work/dev` for misconfigurations, policy violations, and security issues. This helps ensure that the manifests adhere to best practices.
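
As a hedged sketch, the matching `scan-k8s` template could be written as below. The image name, its tag, and the assumption that the `kubescape` binary is on the image's `PATH` are all unverified here, so check them against the Kubescape documentation before relying on this.

```yaml
- name: scan-k8s
  inputs:
    parameters:
      - name: path
      - name: verbose
  container:
    image: quay.io/kubescape/kubescape-cli:latest   # assumption: verify image name and tag
    command: [kubescape]                            # assumption: binary is on PATH
    args:
      - scan
      - "{{inputs.parameters.path}}"                # directory with the cloned manifests
      - "--verbose={{inputs.parameters.verbose}}"
    volumeMounts:
      - name: work
        mountPath: /work                            # shared PVC populated by the clone step
```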

⚙️ **Step 5: Deploying Kubernetes Manifests with kubectl**

```yaml
- name: deploy-kubernetes
  inputs:
    parameters:
      - name: path
      - name: namespace
  container:
    image: bitnami/kubectl:latest
    command: [sh, -c]
    args:
      - |
        echo "Deploying Kubernetes resources from {{inputs.parameters.path}}..."
        kubectl apply -f {{inputs.parameters.path}} -n {{inputs.parameters.namespace}}
    volumeMounts:
      - mountPath: /work
        name: work
```

We use the `bitnami/kubectl` image. The template expects a path (like `/work/dev`) where my Kubernetes manifests are located.

The container mounts the shared work volume that was used in the clone step, ensuring that the files are accessible.

Once executed, it runs `kubectl apply` on the provided path to deploy the resources.
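
For completeness, the DAG task that invokes this template could be sketched like this. The `depends` expression is the one from the DAG shown earlier; the `dev` namespace is a hypothetical value.

```yaml
- name: deploy-kubernetes
  template: deploy-kubernetes
  arguments:
    parameters:
      - name: path
        value: /work/dev          # the same manifests that scan-k8s checked
      - name: namespace
        value: dev                # hypothetical target namespace
  depends: build-image && scan-k8s && clone-repo
```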

🔒 **Note**

> Make sure that the service account your workflow pods run as has the correct RBAC permissions to manage Kubernetes resources (like pods, deployments, services, etc.) in the namespace where you intend to deploy.
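
The `kubectl apply` call runs inside a workflow pod, so the API server checks it against that pod's service account. A minimal sketch of the required RBAC, assuming a service account named `argo-workflow` in the `argo` namespace and a target namespace `dev` (all three names are hypothetical), might look like this:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: workflow-deployer          # hypothetical name
  namespace: dev                   # hypothetical target namespace
rules:
  - apiGroups: ["", "apps"]
    resources: ["services", "configmaps", "deployments"]
    verbs: ["get", "list", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: workflow-deployer
  namespace: dev
subjects:
  - kind: ServiceAccount
    name: argo-workflow            # hypothetical: the account set in spec.serviceAccountName
    namespace: argo
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: workflow-deployer
```

Adjust the resource list to whatever your manifests actually create; granting only what `kubectl apply` needs keeps the blast radius small.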

Here’s what we achieved so far using Argo Workflows:

Stage Tool Purpose

Clone Repo alpine/git Fetch source code

Build Image BuildKit Build Docker image in a secure way

Scan Image Trivy Identify vulnerabilities in Docker image

Scan Manifests Kubescape Catch Kubernetes YAML issues

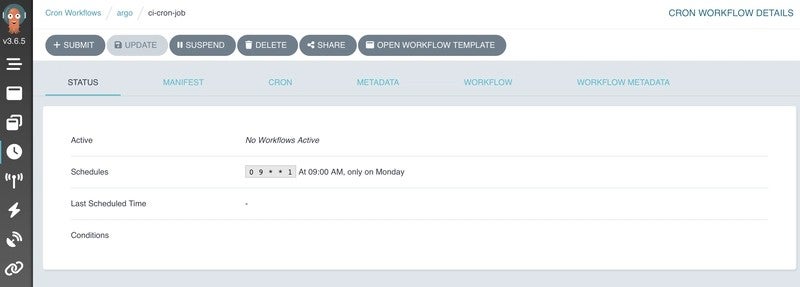

Deploy Kubernetes Kubectl Deploy kubernetes resources 🕒 **Automating CI with CronWorkflow**

To make the CI process completely hands-off, I added a CronWorkflow that runs every Tuesday at 9 AM UTC. This means our CI pipeline automatically triggers once a week without needing any manual input.

This is particularly useful for:

– Automatically building and scanning your base images weekly.

– Ensuring your Kubernetes manifests stay compliant.

– Catching vulnerabilities on a routine basis, even if there are no recent code changes.

Here’s what the CronWorkflow spec looks like:

```yaml
spec:
  schedule: "0 9 * * 2"          # Every Tuesday at 9 AM UTC
  timezone: "UTC"
  concurrencyPolicy: "Replace"   # If the previous run is still running, replace it
  successfulJobsHistoryLimit: 3
  failedJobsHistoryLimit: 1
  workflowSpec:
    workflowTemplateRef:
      name: ci-build-workflow
```

With this in place, the entire CI process (from cloning the repo, building and scanning the image, to pushing it) is performed weekly without requiring any developer to trigger the pipeline.
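
If the referenced WorkflowTemplate exposes workflow-level parameters, they can be pinned in the CronWorkflow itself. The sketch below shows the surrounding manifest with one such override; the resource name `ci-build-weekly` and the `version` parameter are hypothetical.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: CronWorkflow
metadata:
  name: ci-build-weekly            # hypothetical name
spec:
  schedule: "0 9 * * 2"            # Every Tuesday at 9 AM UTC
  timezone: "UTC"
  concurrencyPolicy: "Replace"
  workflowSpec:
    arguments:
      parameters:
        - name: version            # hypothetical workflow-level parameter
          value: v4
    workflowTemplateRef:
      name: ci-build-workflow
```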

The Argo dashboard is still minimal, as is often the case with emerging tools, but it does exactly what it needs to do. It shows all the workflows and their steps, updates automatically, and lets you view progress and logs in one place. This makes it very easy to monitor how everything is going.

Find the complete code and configuration for this setup on GitHub:

GitHub Repository Link CI-build-Workflow: https://github.com/Cloud-Hacks/argo-wf/blob/main/quick-start/wf-ci-workflow.yaml

GitHub Repository Link CronJob Example: https://github.com/Cloud-Hacks/argo-wf/blob/main/quick-start/wf-cronjob-ci.yaml


🌟 Let’s Connect!

I love sharing insights about DevOps, Kubernetes, and GitOps tools like ArgoCD. If you found this article helpful or have questions, let’s continue the conversation on LinkedIn!

👉 Connect with me on [LinkedIn](http://linkedin.com/in/afzalansari07/)