Guest post originally published on the Magalix blog by Mohammed Ahmed

This article is part of our Open Policy Agent (OPA) series, and assumes that you are familiar with Kubernetes and OPA. If you haven’t already done so, or if you need a refresher, please have a look at the previous articles published in this series.

Today we are going to use OPA to validate our Kubernetes Network Policies. In a nutshell, a network policy in Kubernetes enables you to enforce restrictions on pod intercommunication. For example, you can require that a pod must have the app=web label to be able to connect to the database pods. Such practices help reduce the attack surface of your cluster. However, a policy is only as good as its implementation. If you have a well-crafted network policy that lives only in its YAML file and was never applied to the cluster, then it’s useless. Similarly, if important aspects were missed when creating the policy, this poses a risk as well. OPA can help you mitigate those risks. This article provides two hands-on labs explaining the process.

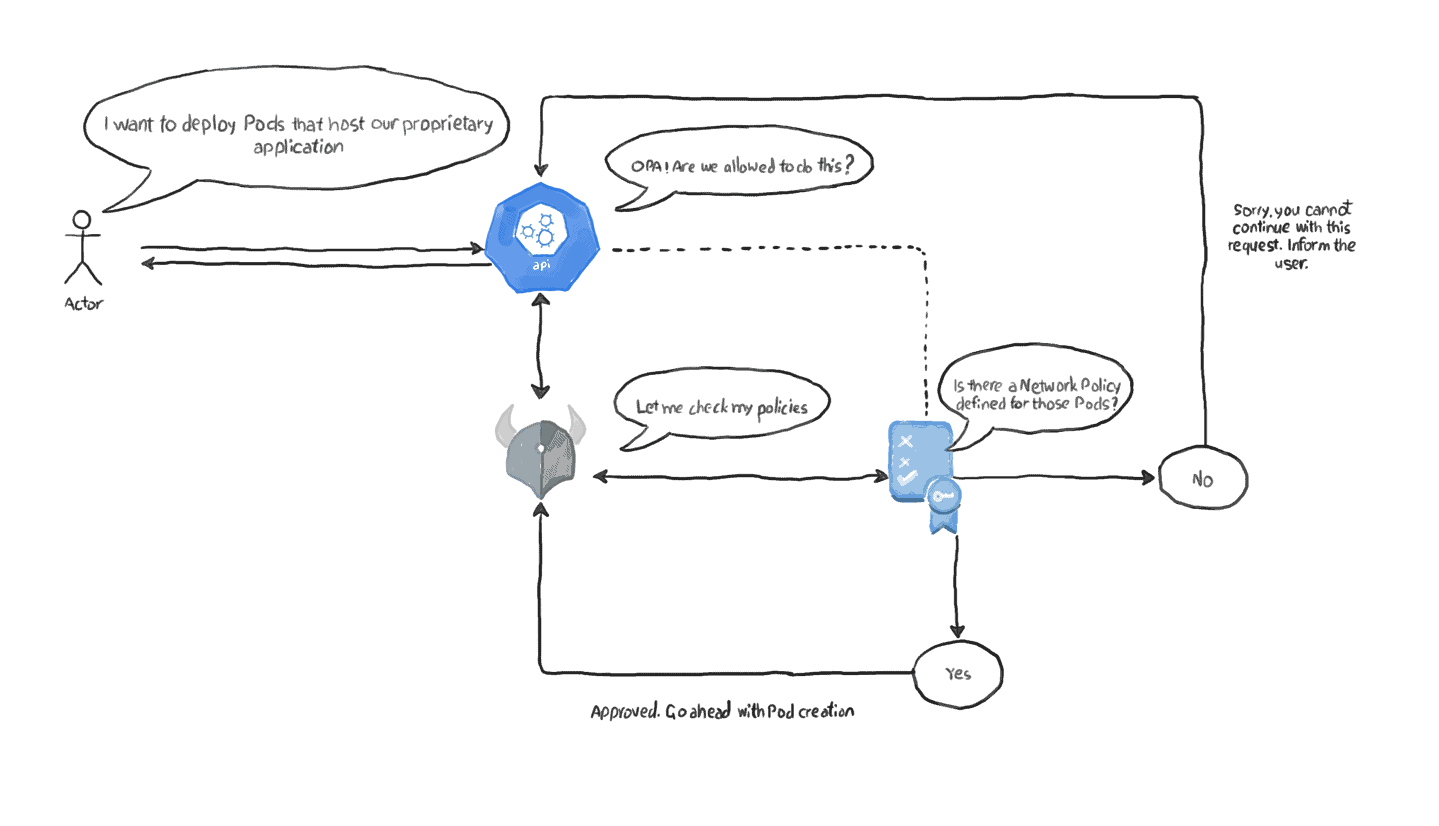

Use Case 1: Ensuring that a network policy exists prior to creating Pods

In this situation, your application pods contain proprietary code that needs increased protection. As part of your security plan, you need to ensure that no pods are allowed to access your application, except the frontend ones.

Create the network policy

You create a network policy that enforces this restriction. It may look like this:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: app-inbound-policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: prop
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: frontend
        ports:
        - protocol: TCP
          port: 80

Ensure that the network policy is working as expected

Let’s set up a quick lab to ensure that our policy is indeed in place. We create a deployment that creates our protected pods. For simplicity, we’ll assume that nginx is the image used by our protected app. The deployment file may look as follows:

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: prop-deployment
      namespace: default
    spec:
      selector:
        matchLabels:
          app: prop
      replicas: 2
      template:
        metadata:
          labels:
            app: prop
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: prop-svc
      namespace: default
    spec:
      selector:
        app: prop
      ports:
      - protocol: TCP
        port: 8080
        targetPort: 80

Apply the above definition and ensure that you have two pods running. Now let’s try to connect to the protected pods using a permitted pod. The following definition creates a pod with the allowed label that uses the alpine image:

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: client-deployment
      namespace: default
    spec:
      selector:
        matchLabels:
          app: frontend
      replicas: 1
      template:
        metadata:
          labels:
            app: frontend
        spec:
          containers:
          - name: alpine
            image: alpine
            command:
            - sh
            - -c
            - sleep 100000

To prove that the pod created by this deployment can access our protected pods, let’s open a shell session to the container and establish an HTTP connection to the pod:

    $ kubectl exec -it client-deployment-7666b46645-27psl -- sh
    / # apk add curl
    / # curl prop-svc:8080
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    body {
    width: 35em;

So, we were able to get HTML output, which means that the connection was successful. Now, let’s create another deployment that uses different labels for the client (you can equally change the labels of the existing pods). The deployment file for the client pod that should not be allowed access to our protected pods should look like this:

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: illegal-client-deployment
      namespace: default
    spec:
      selector:
        matchLabels:
          app: backend
      replicas: 1
      template:
        metadata:
          labels:
            app: backend
        spec:
          containers:
          - name: alpine
            image: alpine
            command:
            - sh
            - -c
            - sleep 100000

Opening a shell session to the container and trying to connect to our target pods:

    $ kubectl exec -it illegal-client-deployment-55b694c9df-2rznp -- sh
    / # apk add curl
    / # curl --connect-timeout 10 prop-svc:8080
    curl: (28) Connection timed out after 10001 milliseconds

We used curl’s --connect-timeout command-line option to show that the connection was still not established after ten seconds had passed. The network policy is doing what it is supposed to.
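The behaviour observed in this lab can be modelled in a few lines of Python. This is only a simplified sketch, not how Kubernetes or a network plugin implements network policies: it handles matchLabels pod selectors in a single namespace, assumes every ingress rule has both a from and a ports section, and the function names (selector_matches, ingress_allowed) are made up for this illustration.

```python
def selector_matches(match_labels, pod_labels):
    """True when every key/value pair in the selector is present on the pod."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


def ingress_allowed(policy, source_labels, dest_labels, port):
    """Simplified model: may a pod with source_labels reach a pod with dest_labels on port?"""
    spec = policy["spec"]
    # The policy only isolates pods selected by spec.podSelector.
    if not selector_matches(spec["podSelector"].get("matchLabels", {}), dest_labels):
        return True
    for rule in spec.get("ingress", []):
        # Assumption of this sketch: peers are plain podSelector entries.
        from_ok = any(
            "podSelector" in peer
            and selector_matches(peer["podSelector"].get("matchLabels", {}), source_labels)
            for peer in rule.get("from", [])
        )
        port_ok = any(p.get("port") == port for p in rule.get("ports", []))
        if from_ok and port_ok:
            return True
    return False


# The policy from this article, reduced to the fields the sketch reads.
app_inbound_policy = {
    "spec": {
        "podSelector": {"matchLabels": {"app": "prop"}},
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 80}],
            }
        ],
    }
}

print(ingress_allowed(app_inbound_policy, {"app": "frontend"}, {"app": "prop"}, 80))
print(ingress_allowed(app_inbound_policy, {"app": "backend"}, {"app": "prop"}, 80))
```

The first call models the permitted client and the second models the client labeled app=backend. Port 80 is used because the policy applies to the pod port, which the Service exposes as 8080.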

Enforcing the existence of the network security policy using OPA

1. Writing the OPA policy in Rego

So far, we’ve used the network security policy to protect our pods by limiting the connection sources. Now, what if this policy is deleted by mistake, or another replica of this cluster (perhaps in another region?) is deployed, but the deployment procedure missed creating the network policy? To avoid those risks, we can deploy an OPA policy that denies creating the app=prop-labelled pods if this network security policy was not in place. As usual, we start by writing the policy in Rego. It should look as follows:

    package kubernetes.admission
    import data.kubernetes.networkpolicies
    # Deny with a message
    deny[msg]{
        input.request.kind.kind == "Pod"
        pod_label_value := {v["app"] | v := input.request.object.metadata.labels} # true
        contains_label(pod_label_value,"prop")
        np_label_value := {v["app"] | v := networkpolicies[_].spec.podSelector.matchLabels}
        not contains_label(np_label_value,"prop")
        msg:= sprintf("The Pod: %v could not be created because it is missing an associated Network Security Policy.",[input.request.object.metadata.name])
    }
    contains_label(arr,val){
        arr[_] == val
    }

- As usual, we start our virtual document with the kubernetes.admission package. For OPA integrations that use the kube-mgmt method, the package name is mandatory.

- Our rule is always called deny and it has a msg variable that will get populated with the reason why the policy was violated.

- Our actual policy implementation starts at line 5. Remember, Rego by default combines all the statements in the policy body with a logical AND. In other words, at least one FALSE statement will make the whole policy evaluate to FALSE, and it will not be triggered.

- In line 5, we assert that the request we are interested in is of type Pod. Otherwise, OPA will intercept any request arriving at the API server and attempt to enforce the policy on it.

- Line 6 extracts the value of the app label for the pod in question. To better understand this line, visualize the pod creation request. The labels part may look as follows:

    "object": {
      "kind": "Pod",
      "apiVersion": "v1",
      "metadata": {
        "uid": "17e42367-d738-410c-af6b-f02d9a8766eb",
        "creationTimestamp": "2020-05-08T21:33:57Z",
        "labels": {
          "app": "prop",
          "pod-template-hash": "9c9cf7d47"
        },

We are interested in pods that host our proprietary application. They are labeled app=prop. So, we use a Rego syntax that is very similar to Python’s list comprehension. It can be read as follows:

- Take the labels object and extract the value of its “app” key, if there is one. This ensures that we only intercept pod creation requests with the correct label. Notice that the result is a set, because the comprehension is written with curly braces.

- In line 7, since we need to ensure that “prop” is the value of the “app” label, we use a helper function, contains_label (lines 12 to 14). Notice that you must use a helper function whenever you need to check for the (non-)existence of an item in a collection. The reason is how Rego handles negation.

- Now that we are certain that we are dealing with the correct pod, let’s move to the second part of the policy: ensuring that there is an existing Network Security Policy that handles this pod.

- To do this, we first need to obtain all the Network Security Policy objects that are currently defined in the cluster. This is done in line 2, where we use Rego’s import keyword to introduce external data.

- Next, in line 8 we iterate over the Network Security Policy objects that we have, searching for the app=prop label in the podSelector part of the object definition. Notice that we are using much the same mechanism that we used with Pods earlier.

- Line 9 checks whether a Network Policy object with the mentioned labels was not found. It uses the same helper function but this time with the not keyword for negation. Remember, OPA policies are triggered when they evaluate to True, so if the object does not exist, this will violate the policy.

- Finally, we prepare the message that users should see when trying to create app=prop pods that do not have a Network Policy defined for them.
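For readers who find it easier to follow the logic in an imperative language, the walkthrough above can be mirrored in Python. This is a rough sketch of what the Rego rule decides, not how OPA evaluates it. The helper name app_label_values is made up for this example, and the replicated Network Policy objects are assumed to arrive as a plain list.

```python
def app_label_values(label_maps):
    """Collect the value of the "app" key from each label map, skipping maps without it."""
    return {labels["app"] for labels in label_maps if "app" in labels}


def deny(request, networkpolicies):
    """Return the denial messages for an admission request (an empty list means allowed)."""
    # Only Pod requests are of interest.
    if request["kind"]["kind"] != "Pod":
        return []
    pod = request["object"]
    # Is this one of the protected app=prop pods?
    pod_values = app_label_values([pod["metadata"].get("labels", {})])
    if "prop" not in pod_values:
        return []
    # Is there at least one Network Policy that selects app=prop pods?
    np_values = app_label_values(
        np["spec"]["podSelector"].get("matchLabels", {}) for np in networkpolicies
    )
    if "prop" in np_values:
        return []
    name = pod["metadata"]["name"]
    return [
        f"The Pod: {name} could not be created because it is missing "
        "an associated Network Security Policy."
    ]


pod_request = {
    "kind": {"kind": "Pod"},
    "object": {
        "metadata": {
            "name": "prop-deployment-9c9cf7d47-qd74j",
            "labels": {"app": "prop", "pod-template-hash": "9c9cf7d47"},
        }
    },
}
backend_policy = {"spec": {"podSelector": {"matchLabels": {"app": "backend"}}}}
prop_policy = {"spec": {"podSelector": {"matchLabels": {"app": "prop"}}}}

print(deny(pod_request, []))                # no Network Policy at all
print(deny(pod_request, [backend_policy]))  # a policy for other pods only
print(deny(pod_request, [prop_policy]))     # a policy that selects app=prop
```

The first two calls return the denial message and the third returns an empty list. Every early return corresponds to one statement of the Rego body failing, which is how the implicit AND between statements plays out.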

2. Modifying kube-mgmt to acquire extra data

Before we go ahead and deploy our OPA policy, we need to instruct our kube-mgmt sidecar container to obtain the list of Network Policy objects defined in the cluster so that we can import it in the policy. This task is as simple as modifying the OPA deployment (please refer to our previous article on how to integrate Kubernetes with OPA using kube-mgmt) so that the kube-mgmt container definition looks as follows:

    - name: kube-mgmt
      image: openpolicyagent/kube-mgmt:0.8
      args:
        - "--replicate-cluster=networking.k8s.io/v1/networkpolicies"

The syntax of the object you want to replicate is simple: the API group and version, followed by a slash and the resource name in lowercase and in the plural. For more information, you may want to refer to the kube-mgmt documentation. After you modify the deployment, wait until the pods are terminated and recreated before proceeding.

3. Deploying the OPA policy

Deploying OPA policies in Kubernetes is as easy as creating a ConfigMap in the opa namespace. Let’s do that:

    kubectl create configmap ensure-nap-existence --from-file=ensure-nap-exists.rego

It’s always a good practice to check that your policy was acquired by OPA with no syntax errors. You can do this by checking the status of the ConfigMap. I prefer having the output in JSON and using jq to parse it. But you can use whatever method you like:

    kubectl get cm ensure-nap-existence -o json | jq '.metadata.annotations'
    {
      "openpolicyagent.org/policy-status": "{\"status\":\"ok\"}"
    }

The status is OK. Now, let’s exercise our policy.

4. Ensuring that the OPA policy works

To test policy execution, we delete the Network Policy that we already defined and the Deployment. Then we recreate the Deployment without recreating the Network Policy:

    $ kubectl delete deployments prop-deployment -n default
    $ kubectl delete networkpolicies app-inbound-policy -n default
    $ kubectl apply -f prop-deployment.yaml
    deployment.apps/prop-deployment created
    service/prop-svc unchanged
    $ kubectl get pod -n default
    NAME                                         READY   STATUS    RESTARTS   AGE
    client-deployment-7666b46645-27psl           1/1     Running   0          18h
    illegal-client-deployment-55b694c9df-2rznp   1/1     Running   0          18h

As you can see from the output, we do not have any prop pods. Let’s probe the Deployment status messages to learn why the pods were not created:

    $ kubectl get deployments prop-deployment -n default -o json | jq '.status.conditions[].message'
    "Created new replica set \"prop-deployment-9c9cf7d47\""
    "Deployment does not have minimum availability."
    "admission webhook \"validating-webhook.openpolicyagent.org\" denied the request: The Pod: prop-deployment-9c9cf7d47-qd74j could not be created because it is missing an associated Network Security Policy."

So, the last message states the reason: we do not have a Network Policy that protects those pods and they will not be deployed unless one is created.

Let’s have another test in which we do create a Network Policy, but it does not watch the pods labeled app=prop. Our definition file for this object may look as follows:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: app-inbound-policy2
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: backend
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: frontend
        ports:
        - protocol: TCP
          port: 80

This policy restricts access to app=backend pods so that only pods labeled app=frontend can connect to them. Clearly, this policy has nothing to do with our app=prop pods. Let’s see what happens when we apply this policy, and delete and recreate our Deployment.

    $ kubectl apply -f nap2.yaml
    networkpolicy.networking.k8s.io/app-inbound-policy2 created
    $ kubectl delete deployments prop-deployment -n default
    deployment.apps "prop-deployment" deleted
    $ kubectl apply -f prop-deployment.yaml
    deployment.apps/prop-deployment created
    service/prop-svc unchanged
    $ kubectl get pods -n default
    NAME                                         READY   STATUS    RESTARTS   AGE
    client-deployment-7666b46645-27psl           1/1     Running   0          18h
    illegal-client-deployment-55b694c9df-2rznp   1/1     Running   0          18h
    $ kubectl get deployments prop-deployment -n default -o json | jq '.status.conditions[].message'
    "Created new replica set \"prop-deployment-9c9cf7d47\""
    "Deployment does not have minimum availability."
    "admission webhook \"validating-webhook.openpolicyagent.org\" denied the request: The Pod: prop-deployment-9c9cf7d47-jkv6v could not be created because it is missing an associated Network Security Policy."

We get the same result. Finally, let’s create the Network Policy that controls app=prop pods (defined earlier in the article), delete and recreate the Deployment, and observe what happens:

    $ kubectl apply -f network-policy.yaml
    networkpolicy.networking.k8s.io/app-inbound-policy created
    $ kubectl delete deployments prop-deployment -n default
    deployment.apps "prop-deployment" deleted
    $ kubectl apply -f prop-deployment.yaml
    deployment.apps/prop-deployment created
    service/prop-svc unchanged
    $ kubectl get pods -n default
    NAME                                         READY   STATUS    RESTARTS   AGE
    client-deployment-7666b46645-27psl           1/1     Running   0          18h
    illegal-client-deployment-55b694c9df-2rznp   1/1     Running   0          18h
    prop-deployment-9c9cf7d47-2dt96              1/1     Running   0          4s
    prop-deployment-9c9cf7d47-lvlmr              1/1     Running   0          4s

The deployment was created successfully. We have two prop pods running. OPA allowed this action because there is a Network Policy in place that covers the pods in question.


Use Case 2: Validating and enforcing the network policy rules

In the previous example, we addressed the risk of having pods hosting a security-sensitive application getting deployed with no Network Policy. However, if you take a close look at the OPA policy we demonstrated, you’ll see that it only ensures the existence of a Network Policy that scopes app=prop-labeled pods. But take a look at the following Network Policy:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: risky-policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: prop
      ingress:
      - from:
        - podSelector:
            matchLabels:
              tier: test
        ports:
        - protocol: TCP
          port: 80

Now, although this object would satisfy our OPA policy restriction, since it matches app: prop labels, it does not fulfill our purpose: to allow connections to the proprietary-app pods only from pods labeled app=frontend. Instead, it allows connections from pods labeled tier=test. To address this risk, we create a second OPA policy that ensures that the Network Policy object serves our ultimate requirement.

1. Writing the OPA policy in Rego

Our policy file should look as follows:

    package kubernetes.admission

    # Deny with a message
    deny[msg]{
        ensure
        count(input.request.object.spec.ingress)
        msg:= sprintf("The Network Policy: %v could not be created because it violates the proprietary app security policy.",[input.request.object.metadata.name])
    }

    ensure {
        # When the requested object is a NetworkPolicy
        input.request.kind.kind == "NetworkPolicy"
        # and it is controlling our protected pods (those labelled app=prop)
        input.request.object.spec.podSelector.matchLabels["app"] == "prop"
        # get the pod label values when the key is "app" from the list of labels that this policy allows ingress connections from
        values := {v["app"] | v:= input.request.object.spec.ingress[_].from[_].podSelector.matchLabels}
        # if we do not have "app=frontend" as the allowed value, this policy is violated
        not exists(values,"frontend") # false when frontend is among the allowed values
    }

    # A helper function to test the existence of the label value
    exists(arr,elem) {
        arr[_] == elem
    }

    ensure {
        # Ensure that we only have one rule in the ingress
        1 != count(input.request.object.spec.ingress) # false when there is exactly one ingress rule
    }

    ensure {
        # Ensure that we only have one from stanza in the ingress
        1 != count([from | from := input.request.object.spec.ingress[_].from]) # false when there is exactly one from stanza
    }

We added comments where we could to make the code self-explanatory. However, there are still some points that need clarification:

The deny rule calls another rule (not a function) called ensure. The reason we put our conditions in a separate rule, rather than having everything in deny, is that we need to combine multiple conditions with a logical OR instead of AND. To explain this better, consider the following restrictions, all of which need to be enforced:

- We are watching objects of kind NetworkPolicy that are scoping pods labeled app=prop, and we require app=frontend to be the allowed source (the first ensure body).

- We are not allowing more than one ingress rule, to avoid granting other pods access to our prop app (the second ensure body).

- For the same reason, we are disallowing additional from stanzas (the third ensure body).

As you can see, we need the policy to trigger if any of the above is true, not only if all of them are true. To achieve that in Rego, we create multiple rules with the same name and reference that name in the main rule (deny).
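The OR behaviour of same-named rules may be easier to see in an imperative sketch. The Python below mirrors the three ensure bodies as separate functions and combines them with any(). It is an illustration of the logic only, not of how OPA evaluates Rego, and all function names are invented for this example.

```python
def allowed_app_values(obj):
    """Values of the "app" label across all ingress[].from[].podSelector.matchLabels."""
    values = set()
    for rule in obj.get("spec", {}).get("ingress") or []:
        for peer in rule.get("from", []):
            labels = peer.get("podSelector", {}).get("matchLabels", {})
            if "app" in labels:
                values.add(labels["app"])
    return values


def prop_policy_without_frontend(request):
    """First ensure body: a NetworkPolicy for app=prop that does not allow app=frontend."""
    obj = request["object"]
    selector = obj.get("spec", {}).get("podSelector", {}).get("matchLabels", {})
    return (
        request["kind"]["kind"] == "NetworkPolicy"
        and selector.get("app") == "prop"
        and "frontend" not in allowed_app_values(obj)
    )


def not_exactly_one_ingress_rule(request):
    """Second ensure body: the number of ingress rules is not one."""
    ingress = request["object"].get("spec", {}).get("ingress")
    return ingress is not None and len(ingress) != 1


def not_exactly_one_from(request):
    """Third ensure body: the number of ingress rules carrying a from stanza is not one."""
    ingress = request["object"].get("spec", {}).get("ingress") or []
    return len([rule["from"] for rule in ingress if "from" in rule]) != 1


def ensure(request):
    # Several rule bodies with the same name behave like a logical OR.
    bodies = (prop_policy_without_frontend, not_exactly_one_ingress_rule, not_exactly_one_from)
    return any(body(request) for body in bodies)


def deny(request):
    """Statements inside one body are ANDed: ensure must hold and ingress must be present."""
    obj = request["object"]
    if obj.get("spec", {}).get("ingress") is None or not ensure(request):
        return []
    name = obj["metadata"]["name"]
    return [
        f"The Network Policy: {name} could not be created because it "
        "violates the proprietary app security policy."
    ]


def make_request(name, source_labels):
    """Build a minimal NetworkPolicy admission request for app=prop pods."""
    return {
        "kind": {"kind": "NetworkPolicy"},
        "object": {
            "metadata": {"name": name},
            "spec": {
                "podSelector": {"matchLabels": {"app": "prop"}},
                "ingress": [{"from": [{"podSelector": {"matchLabels": source_labels}}]}],
            },
        },
    }


print(deny(make_request("risky-policy", {"tier": "test"})))
print(deny(make_request("app-inbound-policy", {"app": "frontend"})))
```

The first call returns the denial message, because the first body holds. The second returns an empty list, because none of the three bodies holds.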

2. Deploying the OPA policy

Again, deploying the OPA policy is as simple as creating the ConfigMap in the opa namespace:

    $ kubectl create configmap enforce-correct-nap --from-file=enforce-correct-nap.rego
    configmap/enforce-correct-nap created

We won’t add the policy validation step for brevity, but it’s recommended that you always validate your policies as described earlier.
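If you want to script that validation step instead of reading the jq output by eye, a small helper along these lines could work. It is a sketch that assumes the ConfigMap JSON (for example from kubectl get cm with -o json) has already been loaded into a Python dict. The annotation key is the one shown earlier in this article, and the function names are hypothetical.

```python
import json

POLICY_STATUS_KEY = "openpolicyagent.org/policy-status"


def policy_status(configmap):
    """Decode the policy-status annotation, which is itself a JSON string; None if absent."""
    annotations = configmap.get("metadata", {}).get("annotations") or {}
    raw = annotations.get(POLICY_STATUS_KEY)
    return json.loads(raw) if raw is not None else None


def policy_loaded_ok(configmap):
    """True when the annotation is present and reports the status "ok"."""
    status = policy_status(configmap)
    return status is not None and status.get("status") == "ok"


# Sample shaped like the annotation output shown earlier in the article.
sample = {
    "metadata": {
        "annotations": {POLICY_STATUS_KEY: "{\"status\":\"ok\"}"}
    }
}
print(policy_loaded_ok(sample))
```

The annotation value needs a second json.loads call because it is a JSON document stored inside a string.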

3. Ensuring that the OPA policy works

To confirm that our OPA policy is effective, let’s try to deploy the risky Network Policy that we demonstrated earlier:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: risky-policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: prop
      ingress:
      - from:
        - podSelector:
            matchLabels:
              tier: test
        ports:
        - protocol: TCP
          port: 80

    $ kubectl apply -f nap2.yaml
    Error from server (The Network Policy: risky-policy could not be created because it violates the proprietary app security policy.): error when creating "nap2.yaml": admission webhook "validating-webhook.openpolicyagent.org" denied the request: The Network Policy: risky-policy could not be created because it violates the proprietary app security policy.

So, we are not allowed to create a Network Policy that scopes our prop pods and accepts incoming connections from anywhere except app=frontend.

Finally, delete and recreate our original Network Policy to ensure that we are allowed to deploy it. For a refresher, it looked as follows:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: app-inbound-policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: prop
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: frontend
        ports:
        - protocol: TCP
          port: 80

    $ kubectl apply -f network-policy.yaml
    networkpolicy.networking.k8s.io/app-inbound-policy created

With the two policies in place, we cannot create pods with the app=prop label unless the Network Policy that protects them is also in place. Additionally, we cannot create that Network Policy unless it contains the required protection rules.

TL;DR

- OPA is a general-purpose, platform-agnostic policy enforcement tool.

- This means that OPA does not work only with Kubernetes but with many other technologies and products.

- OPA works simply by intercepting the API call and validating it against the rules stored in memory.

- When integrated with Kubernetes, OPA is deployed as an admission controller. It intercepts calls to the API server to create, modify or delete a resource. Then, it validates the request based on the policies that it already has. Depending on the result, the API call may proceed to persist the object, or the request would be denied and – optionally – a descriptive message is sent to the user with the reason for rejection.

- OPA policies are written in Rego, a language that was designed specifically for this purpose.

- Rego is essentially different from other programming languages. For example, you are allowed to define several functions with the same name and signature but with a different body. Also, the way Rego handles iteration over arrays is uncommon.

- Despite that, Rego borrows some powerful features from other languages, like list comprehensions from Python.

- With Rego, you can validate any part of the JSON object of the API request. You can also import external data from Kubernetes and use it in the validation process.

- We strongly encourage you to use the Rego Playground to test and validate your own policies before applying them.
