Originally published on the Epsagon blog by Ran Ribenzaft, co-founder and CTO at Epsagon

Over the past few years, serverless architectures have been rapidly gaining in popularity. The main advantage of this technology is the ability to create and run applications without managing infrastructure. In other words, when using a serverless architecture, developers no longer need to allocate resources, scale and maintain servers to run applications, or manage databases and storage systems. Their sole responsibility is to write high-quality code.

There have been many open-source projects for building serverless frameworks (Apache OpenWhisk, IronFunctions, Fn from Oracle, OpenFaaS, Kubeless, Fission, Knative, Project Riff, etc.). Many developers are drawn to these open-source solutions because they provide access to the latest innovations.

This article discusses a few of the frameworks mentioned above and takes a closer look at OpenFaaS and Knative, presenting their architecture, main components, and basic installation steps. If you are interested in this topic and plan to develop serverless applications using open-source platforms, this article will give you a better understanding of these solutions.

OpenWhisk, Firecracker, Oracle Fn, Kubeless & Fission

Before delving into OpenFaaS and Knative, let’s briefly describe these other platforms.

Apache OpenWhisk is an open-source cloud platform for serverless computing that exposes cloud computing resources as services. Compared to other open-source projects (Fission, Kubeless, IronFunctions), Apache OpenWhisk stands out for its large codebase, high-quality features, and large number of contributors. However, the many heavyweight components the platform depends on (CouchDB, Kafka, Nginx, Redis, and ZooKeeper) create difficulties for developers. In addition, the platform has weaknesses in terms of security.

Firecracker is a virtualization technology introduced by Amazon. It provides virtual machines with minimal overhead and allows for the creation and management of isolated environments and services. Firecracker offers lightweight virtual machines called microVMs, which use hardware-based virtualization for full isolation while providing performance and flexibility at the level of conventional containers. One inconvenience for developers is that Firecracker is written entirely in Rust. It also relies on a stripped-down software environment with a minimal set of components: to save memory, reduce startup time, and increase security, it launches a modified Linux kernel from which everything superfluous has been removed, with reduced functionality and device support. The project was developed at Amazon Web Services to improve the performance and efficiency of the AWS Lambda and AWS Fargate platforms.

Oracle Fn is an open-source serverless platform that provides an additional level of abstraction over cloud systems to deliver Functions as a Service (FaaS). As with other open platforms, in Oracle Fn the developer implements the logic at the level of individual functions. Unlike commercial FaaS platforms such as AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions, Oracle’s solution is positioned as having no vendor lock-in. Users can choose any cloud provider to run the Fn infrastructure, combine different cloud systems, or run the platform on their own hardware.

Kubeless is a framework for deploying serverless functions in your Kubernetes cluster. It lets you trigger Python, Node.js, or Ruby code through both HTTP requests and events. Kubeless is built on core Kubernetes functionality such as Deployments, Services, ConfigMaps, and so on. This keeps the Kubeless codebase small. It also means that its developers do not have to reimplement large portions of the scheduling logic that already exists inside Kubernetes itself.
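For illustration, a Kubeless function written in Python is an ordinary function that receives an `event` and a `context`. With the Kubeless Python runtime, the request payload is available under `event["data"]`. The sketch below assumes that convention, and the function name `hello` is only an example. Because it is plain Python, it can be exercised locally without a cluster:

```python
def hello(event, context):
    """Echo the payload of an HTTP request or event back to the caller.

    Assumes the Kubeless Python runtime convention in which the payload
    is stored under event["data"]; `context` is unused in this sketch.
    """
    return event["data"]


if __name__ == "__main__":
    # Local check without Kubernetes: build a minimal fake event by hand.
    print(hello({"data": "hello from kubeless"}, context=None))
```

Once deployed (for example with the `kubeless function deploy` command), the same function can be bound to HTTP or event triggers without changing its code.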

Fission is an open-source platform that provides a serverless architecture on top of Kubernetes. One advantage of Fission is that it handles most of the work of scaling resources in Kubernetes automatically, freeing you from manual resource management. A second advantage is that you are not tied to one provider. You can move freely from one to another, provided that they support Kubernetes clusters (and any other specific requirements your application may have).

Main Benefits of Using OpenFaaS and Knative

OpenFaaS and Knative are publicly available and free open-source environments for creating and hosting serverless functions. These platforms allow you to:

- Reduce idle resources.

- Quickly process data.

- Interconnect with other services.

- Balance the load when intensively processing a large number of requests.

However, despite the advantages of both platforms and serverless computing in general, developers must assess the application’s logic before starting an implementation. This means that you must first break the logic down into separate tasks, and only then can you write any code.
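As a sketch of what this decomposition can look like, the hypothetical order-processing flow below is split into three small, single-purpose tasks. Each task could be deployed as its own serverless function. The names `validate_order`, `calculate_total`, and `format_receipt` and the order format are illustrative assumptions, not part of either platform:

```python
import json


def validate_order(raw: str) -> dict:
    """Task 1: parse and validate the incoming request body."""
    order = json.loads(raw)
    if not order.get("items"):
        raise ValueError("order must contain at least one item")
    return order


def calculate_total(order: dict) -> dict:
    """Task 2: compute the order total from item prices and quantities."""
    total = sum(item["price"] * item["qty"] for item in order["items"])
    return {**order, "total": round(total, 2)}


def format_receipt(order: dict) -> str:
    """Task 3: render the response; nothing is kept between calls."""
    return json.dumps({"id": order["id"], "total": order["total"]})


if __name__ == "__main__":
    body = '{"id": "A1", "items": [{"price": 2.5, "qty": 4}, {"price": 1.0, "qty": 1}]}'
    # Locally the tasks are chained directly; on a serverless platform each
    # one would be a separate function invoked over HTTP or through events.
    print(format_receipt(calculate_total(validate_order(body))))
```

Keeping each task free of shared state is what allows the platform to scale and restart the functions independently.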

For clarity, let’s consider each of these open-source serverless solutions separately.

How to Build and Deploy Serverless Functions With OpenFaaS

The main goal of OpenFaaS is to simplify building serverless functions with Docker containers, allowing you to run complex and flexible infrastructures.

OpenFaaS Design & Architecture

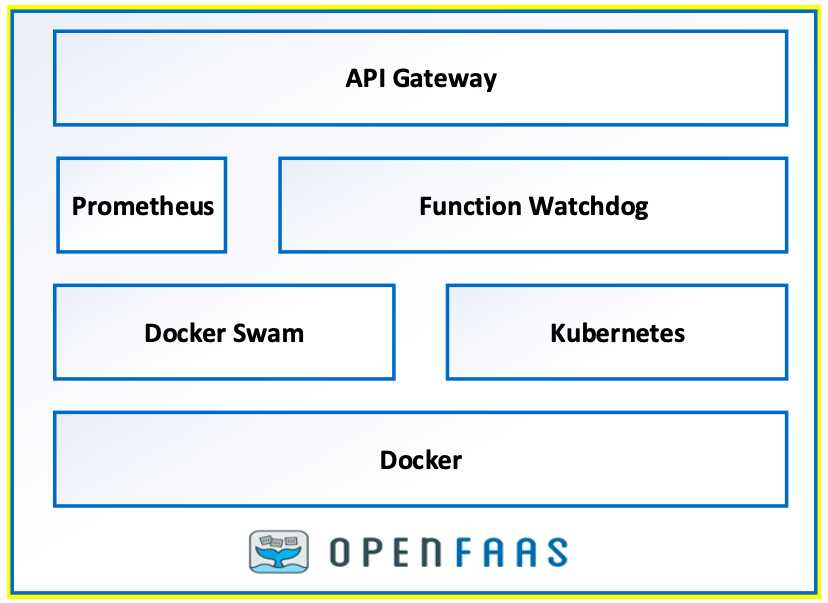

The OpenFaaS architecture is based on cloud-native standards and includes the following components: the API Gateway, the Function Watchdog, Prometheus, Docker, and a container orchestrator (Kubernetes or Docker Swarm). As the architecture diagram above shows, when a developer works with OpenFaaS, the process begins with installing Docker and ends with the API Gateway.

API Gateway

The API Gateway provides a route to all deployed functions, and cloud-native metrics are collected from it through Prometheus.
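To make the gateway’s role concrete, the sketch below models it in a few lines of Python. Requests to `/function/<name>` (the path format used in the curl example later in this article) are looked up in a routing table and passed to the matching function. A counter per function and status code stands in for the metrics that the real gateway exposes to Prometheus. The `Gateway` class and its methods are hypothetical. The real OpenFaaS gateway is written in Go and forwards requests over HTTP instead of calling functions in-process:

```python
from collections import Counter
from typing import Callable, Dict, Tuple

Handler = Callable[[str], str]


class Gateway:
    """Hypothetical, in-process model of an API gateway."""

    def __init__(self) -> None:
        self.routes: Dict[str, Handler] = {}
        # (function name, status code) -> number of invocations
        self.invocations: Counter = Counter()

    def deploy(self, name: str, handler: Handler) -> None:
        self.routes[name] = handler

    def handle(self, path: str, body: str) -> Tuple[int, str]:
        prefix = "/function/"
        if not path.startswith(prefix):
            return 404, "not found"
        name = path[len(prefix):]
        handler = self.routes.get(name)
        if handler is None:
            self.invocations[(name, 404)] += 1
            return 404, f"function {name!r} not found"
        try:
            result = (200, handler(body))
        except Exception as exc:  # a failing function becomes a 500 response
            result = (500, str(exc))
        self.invocations[(name, result[0])] += 1
        return result


if __name__ == "__main__":
    gw = Gateway()
    gw.deploy("echo", lambda body: body)
    print(gw.handle("/function/echo", "hi"))
    print(gw.invocations)
```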

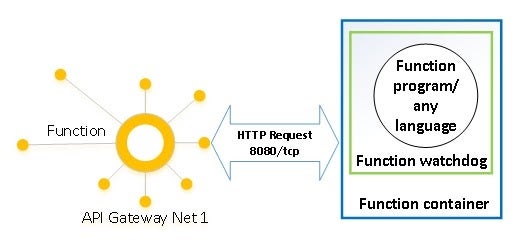

Function Watchdog

A watchdog process is embedded in each function container and provides a common interface between the user and the function.

One of the watchdog’s main tasks is to take an HTTP request received by the API Gateway and invoke the selected function.
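In the classic OpenFaaS watchdog, each request is handled by starting the function’s process, writing the HTTP request body to its standard input, and returning whatever the process writes to standard output as the HTTP response. The sketch below imitates that forking model with Python’s `subprocess` module. `invoke_process` and its status-code mapping are hypothetical. The real watchdog is written in Go, reads the command to run from the `fprocess` environment variable, and also handles headers and timeouts in more detail:

```python
import subprocess
import sys
from typing import List, Tuple


def invoke_process(command: List[str], body: bytes,
                   timeout: float = 5.0) -> Tuple[int, bytes]:
    """Start `command` once per request and pipe `body` to its stdin.

    Returns an HTTP-like (status, payload) pair: 200 with the process's
    stdout on success, 500 with its stderr on a non-zero exit code, or
    504 if the process runs longer than `timeout` seconds.
    """
    try:
        proc = subprocess.run(command, input=body, capture_output=True,
                              timeout=timeout)
    except subprocess.TimeoutExpired:
        return 504, b"function timed out"
    if proc.returncode != 0:
        return 500, proc.stderr
    return 200, proc.stdout


if __name__ == "__main__":
    # Stand-in for a function process: upper-cases whatever it reads.
    upper = [sys.executable, "-c",
             "import sys; sys.stdout.write(sys.stdin.read().upper())"]
    print(invoke_process(upper, b"hello watchdog"))
```

Starting a new process per request keeps functions isolated from one another, at the cost of process startup time on every call.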

Prometheus

Prometheus lets you follow how metrics change at any time, compare them with one another, transform them, and view them as text or as a graph without leaving the main page of its web interface. Prometheus keeps recently collected metrics in RAM and writes them to disk once a given size limit or time period is reached.
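A typical use of these metrics is computing a per-second rate from a counter. For example, the OpenFaaS gateway exposes an invocation counter (`gateway_function_invocation_total`) that can be queried with PromQL’s `rate()` function. The sketch below shows a simplified version of that calculation over timestamped counter samples. `simple_rate` is a hypothetical helper. Like Prometheus, it treats a drop in the counter as a reset, but it omits the extrapolation that the real `rate()` applies at the edges of the window:

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (unix timestamp in seconds, counter value)


def simple_rate(samples: List[Sample]) -> float:
    """Average per-second increase of a counter across `samples`.

    A value lower than the previous one is treated as a counter reset,
    so the new value counts as growth from zero. No extrapolation to the
    window edges is performed, unlike Prometheus's rate().
    """
    if len(samples) < 2:
        return 0.0
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        increase += curr - prev if curr >= prev else curr
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else 0.0


if __name__ == "__main__":
    # Counter sampled every 15 s; it resets and restarts from 0
    # between the 3rd and 4th sample.
    window = [(0, 100), (15, 130), (30, 160), (45, 20), (60, 50)]
    print(simple_rate(window))
```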

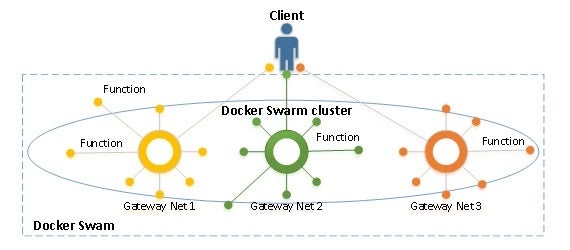

Docker Swarm and Kubernetes

Docker Swarm and Kubernetes are the orchestration engines. Components such as the API Gateway, the Function Watchdog, and a Prometheus instance run on top of these orchestrators. Kubernetes is recommended for production, while Docker Swarm is better suited to developing functions locally.

Moreover, all functions and microservices are packaged as Docker containers. Containers are the main unit that developers and sysadmins use on OpenFaaS to develop, deploy, and run serverless applications.

Main Steps for Installing OpenFaaS on Docker

The OpenFaaS API Gateway relies on the built-in functionality of the selected orchestrator. To do so, the API Gateway connects to the appropriate plugin for that orchestrator, records various function metrics in Prometheus, and scales functions based on alerts received from Prometheus through AlertManager.
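The alert-driven scaling loop can be pictured as a simple step function. While the alert for a function keeps firing, each notification adds a fixed number of replicas up to a maximum. When the alert resolves, the function drops back to its minimum. The sketch below assumes that each step equals a percentage of the maximum replica count. The function `next_replicas` and its default values (`min_replicas=1`, `max_replicas=20`, `factor_percent=20`) are illustrative assumptions, so check the documentation of your OpenFaaS version for the actual settings:

```python
import math


def next_replicas(current: int, alert_firing: bool,
                  min_replicas: int = 1, max_replicas: int = 20,
                  factor_percent: int = 20) -> int:
    """Replica count after one AlertManager notification (hypothetical policy).

    Firing: grow by factor_percent of max_replicas, capped at max_replicas.
    Resolved: fall back to min_replicas.
    """
    if not alert_firing:
        return min_replicas
    step = math.ceil(max_replicas * factor_percent / 100)
    return min(current + step, max_replicas)


if __name__ == "__main__":
    replicas = 1
    for _ in range(6):  # the alert keeps firing for six notifications
        replicas = next_replicas(replicas, alert_firing=True)
        print(replicas)
    print(next_replicas(replicas, alert_firing=False))  # alert resolved
```

With these illustrative defaults, each firing notification adds four replicas until the cap of 20 is reached.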

For example, say you are working on a Linux machine and want to deploy one simple function on a single-node Docker Swarm cluster using OpenFaaS. To do this, you would follow the steps below:

- Install Docker CE 17.05 or later.

- Check that Docker works by running a test container:

$ docker run hello-world

- Initialize Docker Swarm:

$ docker swarm init

- Clone OpenFaaS from GitHub and deploy the stack:

$ git clone https://github.com/openfaas/faas && \
  cd faas && \
  ./deploy_stack.sh

- Log in to the UI portal at http://127.0.0.1:8080.

OpenFaaS is now ready for use, and you do not have to repeat the installation when writing further functions.

Preparing the OpenFaaS CLI for Building Functions

To develop a function, you need to install the latest version of the OpenFaaS command-line tool, faas-cli. With Homebrew, run:

$ brew install faas-cli

Alternatively, use the installation script with curl:

$ curl -sL https://cli.get-faas.com/ | sudo sh

Using Different Programming Languages With OpenFaaS

To create and deploy a function with OpenFaaS using templates in the CLI, you can write a handler in almost any programming language. For example:

- Create new function:

$ faas-cli new --lang <<language>> <<function name>>

- The command above generates the stack file and a folder containing the handler template for the chosen language. Edit the handler to implement your function.

- Build the function:

$ faas-cli build -f <<stack file>>

- Deploy the function:

$ faas-cli deploy -f <<stack file>>
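As an example of a handler, the sketch below implements the `fib` function that is invoked with curl later in this article. It assumes the function was created with the `python3` template under the name `fib`. In that template, the generated `handler.py` exposes a `handle(req)` function that receives the request body as a string, and its return value becomes the response body. Other templates use different signatures:

```python
def handle(req):
    """Return the n-th Fibonacci number for the integer sent in the request body.

    Assumes the OpenFaaS python3 template convention: `req` is the raw
    request body as a string, and the return value is the response body.
    """
    try:
        n = int(req.strip())
    except ValueError:
        return "please send a non-negative integer"
    if n < 0:
        return "please send a non-negative integer"
    a, b = 0, 1
    for _ in range(n):  # iterative, so large n does not hit recursion limits
        a, b = b, a + b
    return str(a)
```

With this handler deployed, sending `10` in the request body should return `55`.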

Testing the Function

You can quickly test the function in several ways:

- Go to the OpenFaaS UI:

http://127.0.0.1:8080/ui/

- Use curl:

$ curl -d "10" http://localhost:8080/function/fib

- Use the UI
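Besides curl and the UI, you can call functions from application code by sending an HTTP POST to the gateway’s `/function/<name>` route, which is the same route used in the curl example above. The sketch below uses only Python’s standard library. `invoke_function` is a hypothetical helper, and its default gateway address assumes the local deployment described earlier:

```python
import urllib.request


def invoke_function(name: str, body: str,
                    gateway: str = "http://127.0.0.1:8080",
                    timeout: float = 10.0) -> str:
    """POST `body` to a function through the gateway and return the reply text."""
    request = urllib.request.Request(
        f"{gateway}/function/{name}",
        data=body.encode("utf-8"),
        method="POST",
    )
    # urlopen raises urllib.error.HTTPError for non-2xx responses.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, `invoke_function("fib", "10")` sends the same request as the curl command above, provided the gateway is running and the function is deployed.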

At first glance, everything probably seems quite simple. However, you still have to deal with many nuances. This is especially the case if you have to work with Kubernetes, require many functions, or need to add additional dependencies to the FaaS main code base.

There is an entire community of OpenFaaS developers on GitHub where you can also find useful information.

Benefits and Disadvantages of OpenFaaS

OpenFaaS simplifies building the system: fixing errors becomes easier, and adding new functionality is much faster than with a monolithic application. In other words, OpenFaaS allows you to run code in any programming language anytime and anywhere.

However, there are drawbacks:

- Lengthy cold-start time for some programming languages.

- Container startup time depends on the provider.

- Limited function lifetime, which means that not every system fits the serverless model. (With OpenFaaS, function containers cannot keep application state in memory for long. The platform creates and destroys them automatically, so functions must be designed to be stateless.)
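The practical consequence of the last point is that any state a function needs must live outside the container. The sketch below contrasts a counter kept in process memory, which is lost whenever the platform replaces the container, with a counter kept in an external store. `ExternalStore` is a hypothetical stand-in for a real database or cache such as Redis. The handler instances simulate containers that the platform creates and destroys:

```python
from typing import Dict


class ExternalStore:
    """Hypothetical stand-in for an external key-value store."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def incr(self, key: str) -> int:
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]


class InMemoryCounter:
    """Anti-pattern: the count lives in the container and dies with it."""

    def __init__(self) -> None:
        self.count = 0

    def handle(self, req: str) -> str:
        self.count += 1
        return str(self.count)


class StatelessCounter:
    """Keeps no state of its own; every call goes to the external store."""

    def __init__(self, store: ExternalStore) -> None:
        self.store = store

    def handle(self, req: str) -> str:
        return str(self.store.incr("visits"))


if __name__ == "__main__":
    store = ExternalStore()
    # Each new instance plays the role of a fresh container replacing the old one.
    for container in (InMemoryCounter(), InMemoryCounter()):
        print("in-memory:", container.handle(""))  # always 1
    for container in (StatelessCounter(store), StatelessCounter(store)):
        print("external:", container.handle(""))  # 1, then 2
```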

Deploying and Running Functions With Knative

Knative allows you to develop and deploy container-based serverless applications that you can easily port between cloud providers. Knative is an open-source platform that is just starting to gain popularity but is already of great interest to developers.

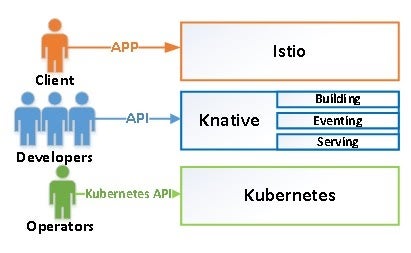

Architecture and Components of Knative

The Knative architecture consists of the Building, Eventing, and Serving components.

Building

The Building component of Knative is responsible for building container images from source code inside the cluster. It is based on existing Kubernetes primitives and also extends them.

Eventing

The Eventing component of Knative handles subscription to events, their delivery, and their management, and it enables communication between loosely coupled architecture components. In addition, this component helps you scale with the load on the server.
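Knative Eventing represents events as CloudEvents. In its broker-and-trigger model, each event is routed to the subscribers whose trigger filters match the event’s attributes, such as `type`. The in-memory sketch below illustrates only that filtering idea. The `Broker` and `Trigger` classes are hypothetical simplifications. In a real cluster, brokers and triggers are Kubernetes resources, and delivery happens over HTTP:

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Event = Dict[str, str]  # CloudEvents-style attributes plus a "data" field


@dataclass
class Trigger:
    """Delivers events whose attributes match every key/value in filter_attrs."""
    filter_attrs: Dict[str, str]
    subscriber: Callable[[Event], None]

    def matches(self, event: Event) -> bool:
        # An empty filter matches every event.
        return all(event.get(k) == v for k, v in self.filter_attrs.items())


@dataclass
class Broker:
    triggers: List[Trigger] = field(default_factory=list)

    def publish(self, event: Event) -> int:
        """Fan the event out to matching subscribers; return the delivery count."""
        delivered = 0
        for trigger in self.triggers:
            if trigger.matches(event):
                trigger.subscriber(event)
                delivered += 1
        return delivered


if __name__ == "__main__":
    received: List[str] = []
    broker = Broker()
    broker.triggers.append(Trigger({"type": "order.created"},
                                   lambda e: received.append("billing:" + e["data"])))
    broker.triggers.append(Trigger({}, lambda e: received.append("audit:" + e["data"])))
    broker.publish({"type": "order.created", "source": "/shop", "data": "A1"})
    broker.publish({"type": "order.shipped", "source": "/shop", "data": "A1"})
    print(received)
```

Because publishers only know the broker and subscribers only declare filters, either side can be changed or scaled without touching the other. This is the loose coupling described above.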

Serving

The main objective of the Serving component is to support the deployment of serverless applications and functions, automatic scaling (including scaling to and from zero), routing and network programming for Istio components, and point-in-time snapshots of the deployed code and configurations. Knative uses Kubernetes as the orchestrator, while Istio handles request routing and advanced load balancing.
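In Knative Serving, automatic scaling is handled by an autoscaler. In its default concurrency-based mode, the autoscaler compares the number of in-flight requests with a per-replica target. A heavily simplified version of that calculation is sketched below. It ignores the stable and panic windows and the scaling rate limits that the real autoscaler applies. The function `desired_replicas` is hypothetical, and the target of 100 concurrent requests per replica is only an illustrative value that depends on your configuration:

```python
import math


def desired_replicas(observed_concurrency: float,
                     target_per_replica: float = 100.0,
                     scale_to_zero: bool = True,
                     max_replicas: int = 0) -> int:
    """Simplified concurrency-based autoscaling decision.

    observed_concurrency: average number of in-flight requests for the service.
    max_replicas: upper bound on replicas; 0 means unbounded in this sketch.
    """
    if observed_concurrency <= 0:
        # No traffic: scale to zero if allowed, otherwise keep one replica.
        return 0 if scale_to_zero else 1
    replicas = math.ceil(observed_concurrency / target_per_replica)
    if max_replicas > 0:
        replicas = min(replicas, max_replicas)
    return replicas


if __name__ == "__main__":
    for load in (0, 1, 100, 250, 1000):
        print(load, desired_replicas(load))
```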

Example of the Simplest Functions With Knative

You can install Knative and create a serverless application on it in several ways. Your choice will depend on your skills and your experience with various services. These include networking layers such as Istio, Gloo, and Ambassador, and platforms such as Google Kubernetes Engine, IBM Cloud, Microsoft Azure Kubernetes Service, Minikube, and Gardener.

Simply select and apply the installation file for each of the three required Knative components.

Each of these components is characterized by a set of objects. More detailed information about the syntax and installation of these components can be found on Knative’s own development site.
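Among the objects that Knative Serving defines are Service, Configuration, Revision, and Route. A Service manages the other three, and its `traffic` section can split requests between revisions. The sketch below builds such a manifest as a Python dictionary and prints it as JSON, a format that `kubectl apply -f` also accepts. The helper `knative_service`, the service name, the revision name, and the 90/10 split are hypothetical. The image is Knative’s public `helloworld-go` sample. Check the Knative documentation for the fields supported by your version:

```python
import json


def knative_service(name: str, image: str, traffic: list) -> dict:
    """Build a Knative Serving Service manifest (serving.knative.dev/v1)."""
    total = sum(t["percent"] for t in traffic)
    if total != 100:
        raise ValueError(f"traffic percentages must sum to 100, got {total}")
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Each change to the template creates a new Revision.
            "template": {"spec": {"containers": [{"image": image}]}},
            # The Route splits requests across revisions by percentage.
            "traffic": traffic,
        },
    }


if __name__ == "__main__":
    manifest = knative_service(
        name="hello",  # hypothetical service name
        image="gcr.io/knative-samples/helloworld-go",
        traffic=[
            # 90% to an existing (hypothetical) revision, 10% to the newest one
            {"revisionName": "hello-00001", "percent": 90},
            {"latestRevision": True, "percent": 10},
        ],
    )
    print(json.dumps(manifest, indent=2))
```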

Benefits and Disadvantages of Knative

Knative has a number of benefits. Like OpenFaaS, Knative allows you to create serverless environments using containers. This in turn allows you to build a local event-based architecture without the restrictions imposed by public cloud services. Knative also automates building containers and provides automatic scaling. As a result, the capacity available to serverless functions is determined by predefined threshold values and event-processing mechanisms.

In addition, Knative allows you to run applications on-premises, in the cloud, or in a third-party data center. This means that you are not tied to any one cloud provider. And because it is built on Kubernetes and Istio, Knative has a higher adoption rate and greater adoption potential.

One main drawback of Knative is that you have to manage the container infrastructure yourself. Simply put, Knative is not aimed at end users. Because of this, however, more commercial managed Knative offerings are becoming available, such as those on Google Kubernetes Engine and Managed Knative for the IBM Cloud Kubernetes Service.

Conclusion

Despite the growing number of open-source serverless platforms, OpenFaaS and Knative will continue to gain popularity among developers. It is worth noting that these platforms cannot be easily compared because they are designed for different tasks.

Unlike OpenFaaS, Knative is not a full-fledged serverless platform; it is better described as a platform for creating, deploying, and managing serverless workloads. However, in terms of configuration and maintenance, OpenFaaS is simpler. With OpenFaaS, there is no need to install all components separately as with Knative. You also don’t have to clear previous settings and resources for new developments if the required components have already been installed.

Still, as mentioned above, a significant drawback of OpenFaaS is that the container launch time depends on the provider, while Knative is not tied to any single cloud solution provider. Based on the pros and cons of both, organizations may also choose to use Knative and OpenFaaS together to effectively achieve different goals.