Guest post by Fei Guo and Lei Zhang of Alibaba

In this guest post, the Kubernetes team from Alibaba shares how they are building hard multi-tenancy on top of upstream Kubernetes by leveraging a group of plugins named “Virtual Cluster” and extending the tenant design in the community. The team has decided to open source these K8s plugins and contribute them to the Kubernetes community at the upcoming KubeCon.

Introduction

At Alibaba, the internal Kubernetes team uses one web-scale cluster to serve a large number of business units as end users. In this setup, every end user effectively becomes a “tenant” of this K8s cluster, which makes hard multi-tenancy a strong need.

However, instead of hacking the Kubernetes APIServer and resource model, the team at Alibaba tried to build a “Virtual Cluster” multi-tenancy layer without changing any Kubernetes code. With this architecture, every tenant is assigned a dedicated K8s control plane (kube-apiserver + kube-controller-manager) and several “Virtual Nodes” (pure Node API objects with no corresponding kubelet), so there are no naming or node conflicts between tenants. The tenant workloads still run mixed together in the same underlying “Super Cluster”, so resource utilization is not sacrificed. This design is detailed in [virtual cluster proposal], which has received lots of feedback.
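To make the naming argument concrete, here is a minimal Python sketch. It is not the Virtual Cluster implementation: the class `TenantControlPlane`, the helpers `super_namespace` and `sync_to_super`, and the prefix-based mapping are all assumptions made up for illustration. Each tenant owns a private object store standing in for its dedicated control plane, and pods are copied into one shared store standing in for the Super Cluster.

```python
from dataclasses import dataclass, field


@dataclass
class TenantControlPlane:
    """Stand-in for a tenant's dedicated apiserver: a private object store."""
    tenant: str
    pods: dict = field(default_factory=dict)  # (namespace, name) -> spec

    def create_pod(self, namespace: str, name: str, spec: dict) -> None:
        key = (namespace, name)
        if key in self.pods:
            raise ValueError(f"pod {namespace}/{name} already exists")
        self.pods[key] = spec


def super_namespace(tenant: str, namespace: str) -> str:
    # Hypothetical mapping: prefix the tenant name so that the same namespace
    # used by two tenants lands in two different super cluster namespaces.
    # This naive prefix is illustrative only and is not guaranteed to be
    # collision-free for arbitrary tenant and namespace names.
    return f"{tenant}-{namespace}"


def sync_to_super(planes: list) -> dict:
    """Copy every tenant pod into one shared store keyed by mapped namespace."""
    super_pods = {}
    for plane in planes:
        for (namespace, name), spec in plane.pods.items():
            super_pods[(super_namespace(plane.tenant, namespace), name)] = spec
    return super_pods


# Two tenants use the same namespace and pod name without conflict.
team_a = TenantControlPlane("team-a")
team_b = TenantControlPlane("team-b")
team_a.create_pod("default", "web", {"image": "nginx"})
team_b.create_pod("default", "web", {"image": "httpd"})
super_pods = sync_to_super([team_a, team_b])
```

Both tenants see a pod called `default/web` in their own view, while the shared store holds two distinct entries, which is the property the paragraph above describes.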

Although a new concept of “tenant master” is introduced in this design, virtual cluster is simply an extension built on top of the existing namespace-based multi-tenancy in the K8s community, referred to as “namespace group” in the rest of this document. Virtual cluster fully relies on the resource isolation mechanisms proposed by namespace group, and we are eagerly expecting and pushing for them to be addressed in the ongoing efforts of Kubernetes WG-multitenancy.

If you want to know more about the Virtual Cluster design, please read the [virtual cluster proposal]. In this document, we focus on the high-level idea behind virtual cluster and elaborate on how we extend the namespace group with a “tenant cluster” view and why this extension is valuable to Kubernetes multi-tenancy use cases.

Background

This section briefly reviews the architecture of the namespace group multi-tenancy proposal.

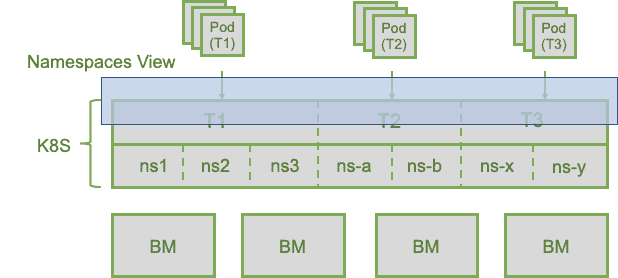

We borrow a diagram from the K8s Multi-tenancy WG Deep Dive presentation, shown in Figure 1, to explain the high-level idea of using namespaces to organize tenant resources.

Figure 1. Namespace group multi-tenancy architecture

In namespace group, all tenant users share the same access point, the K8s apiserver, to use tenant resources. Their accounts, assigned namespaces and resource isolation policies are all specified in tenant CRD objects, which are managed by the tenant admin. A tenant user’s view is limited to that tenant’s namespaces. The tenant resource isolation policies are defined to disable direct communication between tenants and to protect tenant Pods from security attacks. They are realized by native Kubernetes resource isolation mechanisms including RBAC, Pod security policy, network policy, admission control and sandbox runtime. Multiple security profiles can be configured and applied for different levels of isolation requirements. In addition, resource quotas, chargeback and billing happen at the tenant level.
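As a rough illustration of what a tenant object carries, the following Python sketch models a tenant with its users, namespaces and a tenant-level quota. The `Tenant` class, its field names and both helper functions are hypothetical and do not reflect the actual tenant CRD schema; in a real cluster these decisions are made by mechanisms such as RBAC and resource quotas, not by application code like this.

```python
from dataclasses import dataclass, field


@dataclass
class Tenant:
    """Hypothetical, simplified stand-in for a tenant CRD object."""
    name: str
    users: set = field(default_factory=set)
    namespaces: set = field(default_factory=set)
    pod_quota: int = 0  # tenant-level quota, counted across all namespaces


def can_access(tenant: Tenant, user: str, namespace: str) -> bool:
    # A user's view is limited to the namespaces assigned to their tenant.
    return user in tenant.users and namespace in tenant.namespaces


def within_quota(tenant: Tenant, pods_per_namespace: dict, requested: int = 1) -> bool:
    # Quota applies at tenant level: sum usage over the tenant's namespaces only.
    used = sum(pods_per_namespace.get(ns, 0) for ns in tenant.namespaces)
    return used + requested <= tenant.pod_quota


acme = Tenant("acme", users={"alice"}, namespaces={"acme-dev", "acme-prod"}, pod_quota=10)
usage = {"acme-dev": 4, "acme-prod": 5, "other-ns": 100}
```

With these example values, `alice` can reach `acme-dev` but not `other-ns`, and the tenant has room for one more pod because usage in `other-ns` does not count against it.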

How virtual cluster extends the view layer

Conceptually, virtual cluster provides a view layer extension on top of the namespace group solution. Its technical details can be found in [virtual cluster]. In virtual cluster, the tenant admin still needs to use the same tenant CRD used in namespace group to specify the tenant user accounts, namespaces and resource isolation policy in the tenant resource provider, i.e., the super master.

Limitations

Since virtual cluster mainly extends the multi-tenancy view option and prevents the problems caused by sharing an apiserver, it inherits the same limitations and challenges faced by the namespace group solution in making Kubernetes node components tenant-aware. The node components that need to be enhanced include, but are not limited to:

- Kubelet and CNI-plugin. They need to be tenant-aware to support strong network isolation scenarios like VPC.

  - For example, how do readiness/liveness probes work if a pod is isolated in a different VPC from the node? This is one of the issues we’ve already started to cooperate with SIG-Node on upstream.

- Kube-proxy/Kube-dns. They need to be tenant-aware to make cluster-IP type tenant services work.

- Tools: For example, monitoring tools should be tenant-aware to avoid leaking tenant information. Performance tuning tools should be tenant-aware to avoid unexpected performance interference between tenants.
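As one small example of what “tenant-aware” could mean for a monitoring tool, the sketch below filters metric samples so a tenant only sees its own namespaces. The function `visible_samples`, the sample layout and the namespace names are assumptions for illustration, not part of any existing tool.

```python
def visible_samples(samples, tenant_namespaces):
    """Return only the samples whose namespace belongs to the requesting tenant."""
    return [s for s in samples if s["namespace"] in tenant_namespaces]


samples = [
    {"namespace": "acme-dev", "pod": "web-0", "cpu_millicores": 120},
    {"namespace": "globex-prod", "pod": "db-0", "cpu_millicores": 900},
]

# Hypothetical tenant "acme" owns two namespaces; the other tenant's data is hidden.
acme_view = visible_samples(samples, {"acme-dev", "acme-prod"})
```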

Of course, virtual cluster needs extra resources to run a tenant master for each tenant, which may not be affordable in some cases.

Conclusions

Virtual cluster extends the namespace group multi-tenancy solution with a user-friendly cluster view. It leverages the underlying K8s resource isolation mechanisms and the existing Tenant CRD and controller in the community, but provides users with the experience of a dedicated tenant cluster. Overall, we believe virtual cluster together with namespace-based multi-tenancy can offer comprehensive solutions for various Kubernetes multi-tenancy use cases in production clusters, and we are actively working on contributing this plugin to the upstream community.

See ya at KubeCon!