By Randy Abernethy, Managing Partner at RX-M, LLC

Randy Abernethy is a Tech Entrepreneur, coder, startup adviser, financial technology pioneer, Apache Thrift committer, author and highly experienced Destiny guardian.

Curiously reoccurring things

This is an article about the Curiously Reoccurring Communications Pattern, CRCP[1] for short. The CRCP name was inspired by the C++ CRTP[2], the “Curiously Recurring Template Pattern”, a C++ coding pattern identified in 1995 by Jim Coplien.

While the CRTP and CRCP operate in different spheres, they are both curious and reoccurring. They are curious because they are non-obvious answers to important architectural questions. They are reoccurring because people keep reinventing them, only to discover that they are already known amongst the cognoscenti. Patterns like these can offer a valuable roadmap to help new architects overcome common challenges.

While the CRTP has its own Wikipedia page, the Curiously Reoccurring Communications Pattern is more or less an RX-M in-house invention. Therefore, we’ll leave it to you to Google the CRTP and spend our time here focusing on the merits of the less well-known CRCP.

The CRCP

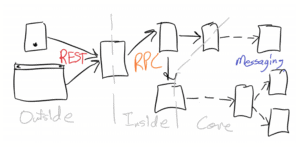

The CRCP is a large scale communications pattern found in the architectural fabric of many distributed applications. In the CRCP, frontend components communicate with the backend using RESTful service interfaces, synchronous backend activities are performed using RPC, and the Core of the backend communicates asynchronously over a messaging fabric. Something like this:

Each communications technology offers the perfect blend of features and function for the subsystem in which it is found. We’ll examine each in turn, working our way from the outside in.

The RESTful outside

Like most modern systems, the “outside” part of a CRCP system operates over the Internet and makes use of the REST[3] architectural pattern. RESTful APIs extract maximum value from the underlying and ubiquitous HTTP protocol. They GET free use of browser and proxy caches all over the world, graciously paid for by the users, not the API developers. HTTP also provides RESTful services with a clean separation between platform-level directives (headers) and application-level communications (verbs, IRIs, status codes, and bodies). Drop in HTTP/2 and the whole thing can go considerably faster at no extra charge (technical or otherwise). Authentication schemes, powerful HTTP-aware gateways, native browser functionality: the list of RESTful benefits goes on. If you want to leverage the global infrastructure of the Web, there’s likely no better choice than REST for your API.
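To make the caching point concrete, here is a minimal sketch using only Python's standard library. It assumes a hypothetical `/orders/<id>` resource backed by an in-memory `ORDERS` dict; the handler sets `Cache-Control` and an `ETag`, and answers a conditional GET (`If-None-Match`) with `304 Not Modified`, which is the standard HTTP mechanism browsers and proxies use to reuse responses. `start_server` and `get` are illustrative helpers, not part of any framework.

```python
import hashlib
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical in-memory resources; a real service would consult a data store.
ORDERS = {"42": {"id": "42", "symbol": "SUPERMEGA", "qty": 100}}


class OrderHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Accept only paths of the form /orders/<id>.
        parts = self.path.strip("/").split("/")
        if len(parts) != 2 or parts[0] != "orders" or parts[1] not in ORDERS:
            self.send_error(404)
            return
        body = json.dumps(ORDERS[parts[1]]).encode("utf-8")
        # An ETag derived from the representation lets caches revalidate cheaply.
        etag = '"%s"' % hashlib.sha256(body).hexdigest()[:16]
        if self.headers.get("If-None-Match") == etag:
            # 304 Not Modified: the cached copy is still valid, so no body is sent.
            self.send_response(304)
            self.send_header("ETag", etag)
            self.end_headers()
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        # Browsers and shared proxies may reuse this response for 60 seconds.
        self.send_header("Cache-Control", "public, max-age=60")
        self.send_header("ETag", etag)
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the demo


def start_server():
    # Port 0 asks the OS for any free port.
    server = ThreadingHTTPServer(("127.0.0.1", 0), OrderHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get(port, path, headers=None):
    # Issue one GET and return (status, headers, body).
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", path, headers=headers or {})
        resp = conn.getresponse()
        return resp.status, dict(resp.getheaders()), resp.read()
    finally:
        conn.close()


if __name__ == "__main__":
    server = start_server()
    port = server.server_address[1]
    status, headers, _ = get(port, "/orders/42")
    print(status, headers["Cache-Control"])  # 200 public, max-age=60
    status, _, _ = get(port, "/orders/42", {"If-None-Match": headers["ETag"]})
    print(status)  # 304
    server.shutdown()
```

In a real deployment these headers are usually set by a framework or gateway; the point is only that caching rides on standard HTTP, so clients and intermediaries need no special tooling to benefit.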

RESTful APIs are also the most likely thing one would expose to partners and engineered client systems, where the ubiquity and tool-less nature of RESTful interfaces are a significant plus. RESTful interfaces exhibit a Resource-Oriented Architecture (ROA): they are decomposed into resources and operations on those resources, which typically makes these APIs easier for counterparties to navigate and understand.
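The resource-oriented decomposition can be sketched as a route table that maps an HTTP verb plus a resource path to an operation. This is a toy dispatcher under stated assumptions: the `orders` collection, the `route` decorator and `dispatch` are hypothetical names, path templates are assumed to contain no regex metacharacters, and a real service would use a web framework described by an OpenAPI document instead.

```python
import itertools
import re
from typing import Callable, Dict, List, Optional, Tuple

# A handler receives path parameters and an optional request body and
# returns (HTTP status code, response payload).
Handler = Callable[[Dict[str, str], Optional[dict]], Tuple[int, object]]
ROUTES: List[Tuple[str, "re.Pattern", Handler]] = []

orders: Dict[str, dict] = {}  # hypothetical in-memory resource collection
_next_id = itertools.count(1)


def route(method: str, template: str):
    # "/orders/{id}" becomes the regex ^/orders/(?P<id>[^/]+)$
    pattern = re.compile("^" + re.sub(r"\{(\w+)\}", r"(?P<\1>[^/]+)", template) + "$")

    def register(fn: Handler) -> Handler:
        ROUTES.append((method, pattern, fn))
        return fn
    return register


@route("GET", "/orders")
def list_orders(params, body):
    return 200, list(orders.values())


@route("POST", "/orders")
def create_order(params, body):
    order_id = str(next(_next_id))
    orders[order_id] = {"id": order_id, **(body or {})}
    return 201, orders[order_id]


@route("GET", "/orders/{id}")
def get_order(params, body):
    order = orders.get(params["id"])
    return (200, order) if order else (404, None)


@route("DELETE", "/orders/{id}")
def delete_order(params, body):
    return (204, None) if orders.pop(params["id"], None) else (404, None)


def dispatch(method: str, path: str, body: Optional[dict] = None) -> Tuple[int, object]:
    path_known = False
    for verb, pattern, handler in ROUTES:
        match = pattern.match(path)
        if match:
            path_known = True
            if verb == method:
                return handler(match.groupdict(), body)
    # 405 when the resource exists but not the verb; 404 when neither does.
    return (405, None) if path_known else (404, None)
```

Once a counterparty knows the `orders` resource exists, it can reasonably guess `GET /orders/{id}` or `DELETE /orders/{id}`; that predictability is much of what makes ROA APIs easy to navigate.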

Clearly, there’s a lot to like about REST, so why consider anything else?

The RPC inside

The world changes considerably when we enter the realm of the backend service. Whether in the cloud or in a traditional on-premises data center, the nature of application decomposition in the backend tends toward smaller services, fewer bits of Web infrastructure and a single organizational view. Take away the Web and the need for cross-org adoption and you take away much of the RESTful value proposition.

Another consideration in a modern cloud native environment is application migration. If you are moving from a large, monolithic, traditional system to microservices, it is a pretty good bet that your monoliths do not have REST APIs internally. Rather, they have functions and methods. Monolith functions and methods can be repackaged as RPC services in short order; migrating the same interface to a resource-oriented API style like REST, however, is a significant engineering undertaking that affects clients and servers alike.
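The monolith-to-RPC argument can be illustrated with Python's built-in XML-RPC server, used here only as a stand-in for a modern RPC system: gRPC or Apache Thrift would add an IDL and generated stubs, but the shape of the migration is similar. `PricingModule` is a hypothetical class imagined as lifted unchanged from a monolith; `register_instance` exposes its public methods as remote procedures without redesigning them around resources.

```python
import threading
import xmlrpc.client
from xmlrpc.server import SimpleXMLRPCServer


class PricingModule:
    """Hypothetical class lifted as-is from a monolith."""

    PRICES = {"SUPERMEGA": 12.5}  # illustrative price table

    def quote(self, symbol, qty):
        return self.PRICES.get(symbol, 0.0) * qty

    def validate(self, symbol, qty):
        return qty > 0 and symbol.isalpha()


def serve(instance, host="127.0.0.1", port=0):
    # Port 0 lets the OS choose a free port.
    server = SimpleXMLRPCServer((host, port), logRequests=False)
    # Every public (non-underscore) method becomes a remote procedure.
    server.register_instance(instance)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = serve(PricingModule())
    url = f"http://127.0.0.1:{server.server_address[1]}"
    with xmlrpc.client.ServerProxy(url) as proxy:
        print(proxy.quote("SUPERMEGA", 100))   # 1250.0
        print(proxy.validate("SUPERMEGA", 0))  # False
    server.shutdown()
```

The caller still invokes `quote` and `validate` as methods; only the transport changed. Mapping the same operations onto REST would first require deciding which resources they represent.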

Also worth considering is the heightened need for performance on the backend. Microservice-oriented systems, in particular, are likely to require many backend calls to satisfy a single frontend request. For example, Netflix has noted in talks on its open source Zuul gateway that, in one analyzed setting, each Internet call typically triggered 6-7 backend calls. Whether the number is 3 or 20, latency in the call chain can quickly add up.
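A little arithmetic shows why per-call cost matters. This sketch uses made-up latencies for seven backend calls (matching the 6-7 figure above, but the millisecond values are purely illustrative, not measurements): dependent calls add up, concurrent independent calls cost as much as the slowest one, and any fixed per-call overhead is multiplied by the length of a sequential chain.

```python
from typing import List, Sequence


def sequential_ms(calls: Sequence[float]) -> float:
    # A chain of dependent calls: each waits for the previous, so latencies add.
    return sum(calls)


def fan_out_ms(calls: Sequence[float]) -> float:
    # Independent calls issued concurrently: done when the slowest returns.
    return max(calls, default=0.0)


def with_overhead(calls: Sequence[float], per_call_ms: float) -> List[float]:
    # Add a fixed per-call transport/serialization cost to every hop.
    return [c + per_call_ms for c in calls]


# Hypothetical service times (ms) for 7 backend calls behind one frontend request.
CALLS = [4.0, 6.0, 3.0, 8.0, 5.0, 2.0, 7.0]

if __name__ == "__main__":
    print(sequential_ms(CALLS))                      # 35.0
    print(fan_out_ms(CALLS))                         # 8.0
    print(sequential_ms(with_overhead(CALLS, 2.0)))  # 49.0
    print(fan_out_ms(with_overhead(CALLS, 2.0)))     # 10.0
```

With a hypothetical 2 ms of overhead per call, the sequential chain grows from 35 ms to 49 ms while the concurrent fan-out grows only from 8 ms to 10 ms, which is one reason both cheaper calls and shallower call chains are worth pursuing.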

Backend services in the synchronous call path of Internet callers affect the perceived responsiveness of the application in question. Thus, the cumulative latency of these inside services can become a user experience problem if not managed. Fortunately, high-performance Remote Procedure Call (RPC) systems are available to address this concern.

CNCF’s gRPC[4] and Apache’s Thrift[5] are both cross-platform RPC systems, and both are regularly benchmarked at rates up to an order of magnitude faster than a functionally equivalent service using a REST interface. These “Modern RPC” systems also support interface evolution, allowing you to add methods and parameters without rebuilding old clients. Both also support cross-language calls, with bindings for nearly every programming language in widespread commercial use today.

Nearly all of the hyperscale firms have a history of RPC innovation and adoption. For example, Google invented Protocol Buffers[6] (the serialization system under gRPC), Facebook followed with Thrift (now Apache Thrift), and Twitter created the Scala-based Finagle[7] system (which can operate over Thrift). Each of these companies uses its respective RPC system across vast swaths of its in-house platform to reduce latency and increase throughput.

Neither gRPC nor Apache Thrift requires an application server; instead, they offer integral lightweight RPC servers in each of the languages they support. Application servers offer many valuable features, but in a world where services are atomically packaged and deployed, perhaps multiple times on the same node, placing an entire application server in a container to host one small microservice can amount to undesired overhead and additional latency.

So there’s a lot to like about modern RPC in a cloud native system backend. Whether your priority is brownfield monolith migration, low latency or efficient containerization, modern RPC solutions can fit the bill. However, while RPC solutions can speed up the synchronous application backend, they are not a natural fit for asynchronous operations or event-driven environments.

The messaging core

In many applications, things at some point stop being synchronous. For example, if a mobile user submits an order for 100 shares of SuperMega to a trading system, validating the order and enriching it may occur in the synchronous RPC space. However, sending the order to a stock market and waiting for it to execute clearly needs to take place in the background. Decoupling subsystems with widely varying processing times is a job for messaging.
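The split between the synchronous and asynchronous halves of the trading example can be sketched with a thread-safe queue standing in for the messaging system. `Order`, `submit`, `market_worker` and `executed` are hypothetical names; `submit` returns as soon as the order is validated and enqueued, while the worker processes orders in the background at whatever pace the (simulated) market allows.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass
class Order:
    symbol: str
    qty: int


outbox: queue.Queue = queue.Queue()  # stand-in for a messaging system such as Kafka or NATS
executed: list = []                  # stand-in for fills reported back by a market


def submit(order: Order) -> str:
    # Synchronous path (the RPC space): validate and enrich, then return at once.
    if order.qty <= 0 or not order.symbol:
        raise ValueError("invalid order")
    enriched = Order(order.symbol.upper(), order.qty)
    outbox.put(enriched)  # hand off; the caller never waits for execution
    return "accepted"


def market_worker() -> None:
    # Asynchronous path: drains the queue at its own pace, however long fills take.
    while True:
        order = outbox.get()
        try:
            if order is None:  # sentinel value requests shutdown
                return
            executed.append(order)  # placeholder for routing to an exchange and awaiting a fill
        finally:
            outbox.task_done()


if __name__ == "__main__":
    worker = threading.Thread(target=market_worker, daemon=True)
    worker.start()
    print(submit(Order("supermega", 100)))  # accepted
    outbox.join()  # demo only: wait for the background work to finish
    print(executed)
    outbox.put(None)
    worker.join()
```

`outbox.join()` is only there so the demo can print a result; a real frontend would return `accepted` to the user and learn about fills later, for example via a notification service subscribed to the same messaging fabric.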

Another critical aspect of many environments is the fact that each message represents a new element of system state. Messaging allows us to embrace “event sourcing”[8], freezing and logging this state as it enters the system. These little state deltas can then be distributed to a wide range of services that may want to act independently on them. Auditing services, client notification services, risk analytics services, and more can all operate at their own pace in parallel as the data arrives.
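Event sourcing can be shown in a few lines: state is never stored directly but derived by folding an immutable event history, and different consumers derive different views from the same events. The `Event` type, its `kind` values and the two projections below are hypothetical and deliberately simplified.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class Event:
    # Immutable record of something that happened; never updated in place.
    kind: str    # "filled" or "cancelled" in this toy model
    symbol: str
    qty: int     # signed: positive for buys, negative for sells


def positions(events: Sequence[Event]) -> Dict[str, int]:
    # Projection 1: current holdings, rebuilt purely by folding the event history.
    def apply(state: Dict[str, int], e: Event) -> Dict[str, int]:
        if e.kind == "filled":
            return {**state, e.symbol: state.get(e.symbol, 0) + e.qty}
        return state
    return reduce(apply, events, {})


def audit_lines(events: Sequence[Event]) -> List[str]:
    # Projection 2: an independent consumer deriving something else from the same log.
    return [f"{i}:{e.kind}:{e.symbol}:{e.qty}" for i, e in enumerate(events)]


# A hypothetical append-only log of state deltas.
LOG: Tuple[Event, ...] = (
    Event("filled", "SUPERMEGA", 100),
    Event("filled", "SUPERMEGA", -40),
    Event("cancelled", "SUPERMEGA", 10),
)
```

`positions(LOG)` yields `{'SUPERMEGA': 60}`, while `positions(LOG[:1])` reconstructs the earlier state `{'SUPERMEGA': 100}`; being able to rebuild any past state from the log is what makes replay for debugging and auditing possible.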

Publish and subscribe systems allow message consuming services to be scaled out to process messages at extreme throughput rates. Messages can also be captured and replayed to reproduce bugs, run tests, train ML systems and so on. There is no better way to unlock parallel activities and unencumber innovation at the heart of a system than messaging.
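Independent consumption and replay both fall out of a retained, append-only log in which each consumer tracks its own position. The toy `Topic` class below is loosely modeled on how Kafka consumers track offsets; it is an in-memory illustration, not Kafka's or NATS's API, and it ignores partitions, persistence and delivery guarantees.

```python
from typing import Dict, List


class Topic:
    """Toy append-only topic; each consumer tracks its own read offset."""

    def __init__(self) -> None:
        self._messages: List[str] = []
        self._offsets: Dict[str, int] = {}

    def publish(self, message: str) -> int:
        self._messages.append(message)
        return len(self._messages) - 1  # offset of the new message

    def poll(self, consumer: str, max_messages: int = 10) -> List[str]:
        # Each consumer reads from its own position, independently of the others.
        start = self._offsets.get(consumer, 0)
        batch = self._messages[start:start + max_messages]
        self._offsets[consumer] = start + len(batch)
        return batch

    def seek(self, consumer: str, offset: int) -> None:
        # Rewind one consumer to replay history, e.g. to reproduce a bug.
        self._offsets[consumer] = offset
```

Because hypothetical `audit` and `risk` consumers would keep separate offsets, a slow consumer never holds back a fast one, and `seek` rewinds a single consumer without affecting the others.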

A small group of cluster-based, cloud native messaging platforms has found its way into next-generation applications. In particular, Apache Kafka[9] and CNCF’s NATS[10] are both high-profile examples of messaging systems that can scale to the level demanded by large microservice systems. Both Kafka and NATS are frequently referred to as “central nervous systems” for applications.

It is easy to assume that the significant difference in processing models makes defining interfaces for messaging systems and for RPC systems entirely separate tasks. This, however, need not be the case.

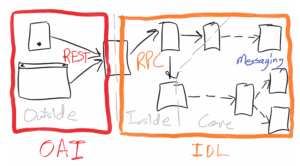

Interface definition languages

Valuable synergies can be harvested when using RPC and messaging together. For example, IDL (Interface Definition Language) based systems, like Protocol Buffers and Apache Thrift, make it easy to describe messages along with service interfaces. This allows one to serialize messages across a wide range of languages for use with RPC and messaging systems alike, bringing the fast, efficient serialization of Protocol Buffers or Apache Thrift to both worlds in a single IDL solution.
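The sketch below stands in for an IDL-generated type: a hypothetical `Order` message with a compact, fixed binary layout built with Python's `struct` module. It is not the Protocol Buffers or Thrift wire format; the point is that one message definition serves both as the payload of an RPC-style handler and as the body of a message on a queue standing in for a broker.

```python
import queue
import struct
from dataclasses import dataclass

# Fixed header: unsigned 64-bit id, unsigned 32-bit qty, unsigned 16-bit symbol
# length, all big-endian, followed by the UTF-8 symbol bytes.
HEADER = struct.Struct(">QIH")


@dataclass
class Order:
    # Hypothetical message type; in practice it would be declared once in a
    # .proto or .thrift file and generated for every language that needs it.
    order_id: int
    symbol: str
    qty: int

    def to_bytes(self) -> bytes:
        sym = self.symbol.encode("utf-8")
        return HEADER.pack(self.order_id, self.qty, len(sym)) + sym

    @classmethod
    def from_bytes(cls, data: bytes) -> "Order":
        order_id, qty, n = HEADER.unpack_from(data)
        start = HEADER.size
        return cls(order_id, data[start:start + n].decode("utf-8"), qty)


def handle_validate(request: bytes) -> bytes:
    # RPC-style handler: the request payload is a serialized Order.
    order = Order.from_bytes(request)
    return b"\x01" if order.qty > 0 else b"\x00"


bus: queue.Queue = queue.Queue()  # stand-in for a Kafka topic or NATS subject


def publish(order: Order) -> None:
    # Messaging path: the very same serialized form goes onto the bus.
    bus.put(order.to_bytes())
```

Because both paths share one definition, a schema change is made once and picked up by RPC and messaging code alike.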

It is a grave mistake to think of the messages passed through a system as anything other than a key manifestation of an API contract. Protocol Buffers and Thrift allow you to evolve these message contracts without breaking existing systems, for example by using collections and by adding or removing optional attributes. In short, there’s a lot of value to harvest from the IDL tools and serialization engines of RPC systems when messaging.
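This kind of evolution relies on each encoded field carrying a numeric ID and a wire type, so a reader can skip fields it does not know and fill in defaults for fields it does not receive. The following toy tagged encoding demonstrates only that idea; the layout, the `encode`/`decode` functions and the `ORDER_V1`/`ORDER_V2` schemas are hypothetical and much simpler than the real Protocol Buffers or Thrift encodings (which define more types and, in some protocols, variable-length integers).

```python
import struct
from typing import Any, Dict, Tuple

INT, STR = 1, 2  # toy wire types; real formats define more

# field id -> (field name, wire type, default used when the field is absent)
Schema = Dict[int, Tuple[str, int, Any]]


def encode(schema: Schema, values: Dict[str, Any]) -> bytes:
    out = bytearray()
    for field_id, (name, wire_type, _default) in schema.items():
        if name not in values:
            continue  # absent fields are simply not written
        out += struct.pack(">HB", field_id, wire_type)  # tag: id + type
        if wire_type == INT:
            out += struct.pack(">q", values[name])
        else:
            raw = values[name].encode("utf-8")
            out += struct.pack(">I", len(raw)) + raw
    return bytes(out)


def decode(schema: Schema, data: bytes) -> Dict[str, Any]:
    result = {name: default for name, _type, default in schema.values()}
    pos = 0
    while pos < len(data):
        field_id, wire_type = struct.unpack_from(">HB", data, pos)
        pos += 3
        # The wire type alone says how many bytes to consume,
        # even for fields this reader has never heard of.
        if wire_type == INT:
            (value,) = struct.unpack_from(">q", data, pos)
            pos += 8
        elif wire_type == STR:
            (length,) = struct.unpack_from(">I", data, pos)
            value = data[pos + 4:pos + 4 + length].decode("utf-8")
            pos += 4 + length
        else:
            raise ValueError(f"unknown wire type {wire_type}")
        if field_id in schema:  # unknown ids are skipped, not rejected
            result[schema[field_id][0]] = value
    return result


# Version 1 of a hypothetical Order contract, and version 2 which adds field 3.
ORDER_V1: Schema = {1: ("symbol", STR, ""), 2: ("qty", INT, 0)}
ORDER_V2: Schema = {**ORDER_V1, 3: ("limit_price_cents", INT, 0)}
```

A v2 producer can add `limit_price_cents` without breaking a v1 consumer, and a v2 consumer reading an old v1 message simply sees the default of 0.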

While IDL provides machine-readable documentation for RPC and messaging contracts, the need to document RESTful service contracts is also critical, particularly given their external-facing nature. RESTful API definition requires an ROA-aligned solution, like the Swagger-based OpenAPI Initiative (OAI)[11].

Edge services may need to expose RESTful interfaces while consuming RPC interfaces. Fortunately, there are tools that make these transitions easier. For example, Apache Thrift can serialize any IDL-defined entity to and from JSON, making these types easy to exchange with the world of the Web.
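Here is a minimal edge-service sketch, assuming a hypothetical `Order` type and using Python's `json` and `dataclasses` modules as a stand-in for Thrift's JSON protocol (which has its own encoding). A JSON request body is converted into the typed object, passed to a placeholder for a backend RPC call, and the result is converted back to JSON for the Web client.

```python
import json
from dataclasses import asdict, dataclass, fields


@dataclass
class Order:
    # Hypothetical stand-in for an IDL-generated type.
    symbol: str
    qty: int
    limit_price_cents: int = 0


def order_from_json(text: str) -> Order:
    raw = json.loads(text)
    known = {f.name for f in fields(Order)}
    # Drop unexpected keys from Web clients rather than failing the request.
    return Order(**{k: v for k, v in raw.items() if k in known})


def order_to_json(order: Order) -> str:
    return json.dumps(asdict(order), sort_keys=True)


def rpc_enrich(order: Order) -> Order:
    # Hypothetical placeholder for a call to a backend gRPC/Thrift service.
    return Order(order.symbol.upper(), order.qty, order.limit_price_cents)


def edge_post_order(body: str) -> str:
    # REST in, RPC across, REST out.
    return order_to_json(rpc_enrich(order_from_json(body)))
```

Whether unknown keys should be dropped or rejected at the edge is a policy choice; dropping them mirrors the tolerant-reader behavior IDL systems apply to unknown fields.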

Summary

So while the CRCP is conceptually fairly high level and not suitable for all applications, it does occur with curious frequency in large-scale distributed systems. Even if all of the pieces are not a fit for your use case, understanding the CRCP elements and their motivations may bring some value when thinking about the right communications patterns and tools for your next cloud native endeavor.

Footnotes

- [1] CRCP – Curiously Reoccurring Communications Pattern, a term coined at RX-M and the subject of this blog

- [2] CRTP – Curiously Recurring Template Pattern, an idiom in C++ in which a class X derives from a class template instantiation using X itself as a template argument; more generally known as F-bound polymorphism, a form of F-bounded quantification, https://en.wikipedia.org/wiki/Curiously_recurring_template_pattern

- [3] REST – Representational State Transfer, an architectural style wherein resources are identified by URIs and interacted with via HTTP verbs like GET, POST, PUT, DELETE and PATCH, https://en.wikipedia.org/wiki/Representational_state_transfer

- [4] gRPC – a high-performance, HTTP/2-based, cross-platform RPC system, https://grpc.io/

- [5] Apache Thrift – a high-performance, pluggable, cross-platform RPC and serialization system, https://thrift.apache.org/

- [6] Protocol Buffers – a compact and efficient cross-platform message serialization system, https://developers.google.com/protocol-buffers/

- [7] Finagle – an extensible RPC system for the JVM, used to construct high-concurrency servers, https://twitter.github.io/finagle/

- [8] Event Sourcing – a pattern wherein state is managed as an immutable set of events occurring within a system or delivered to a system from an external source, https://docs.microsoft.com/en-us/azure/architecture/patterns/event-sourcing

- [9] Kafka – a highly scalable distributed commit log, https://kafka.apache.org/

- [10] NATS – a highly scalable message distribution system, https://nats.io/

- [11] OpenAPI Initiative – the Swagger-based, open source, openly governed initiative focused on developing open standards for documenting RESTful interface contracts, https://www.openapis.org/